The best BI ETL tools: top 10 ETL tools

As Passionned Group, we conduct thorough research on the functionality of ETL software and the market for data integration solutions. If you’re looking for the best ETL tool for your situation, our ETL guide will help you draw up a shortlist quickly, whether you are considering commercial ETL tooling or open source ETL tools. Our guide covers Oracle’s ETL tools, the SAS ETL tool, the Microsoft ETL tool (SSIS), Informatica’s ETL tools, data warehousing ETL tools and many other data integration solutions.

Take a look at the main ETL software tools that are currently available on the market and have a decent track record. Want to compare these ETL tools? Then download our ETL & Data Integration Guide 2024 here.

What is ETL and what does this abbreviation stand for?

The abbreviation ETL stands for Extraction, Transformation and Loading of data. Each of these terms is explained below:

- Extraction: during this step you select, filter and transfer data from your sources to a temporary storage location. This can be in the computer’s internal memory or on disk. An example of an extraction is selecting the orders and order lines that were new yesterday. During the extraction you often apply the principle of ‘change data capture’, so that you only select data that has been added or changed since the previous ‘load’.

- Transformation: here you transform the data into a structure that is better suited to analyzing large amounts of data. For example, you go from a highly normalized structure to a denormalized structure such as a dimensional model with a star schema or snowflake schema.

- Loading: in this final step, you permanently store the transformed data in a database or file. This can be a data warehouse, a CRM system in which you want to view customer data from other systems, or a file that an analyst or controller wants to use for an analysis.
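The three steps above can be sketched in a few lines of Python. This is a minimal illustration only, not how any particular ETL tool works: it assumes an SQLite source with hypothetical `customers`, `orders` and `order_lines` tables, a hypothetical `fact_sales` target table, and a simple timestamp comparison standing in for change data capture.

```python
import sqlite3


def extract_new_orders(source, last_load):
    """Extract: select only order lines whose order was created after the previous load."""
    query = """
        SELECT o.order_id, o.customer_id, o.created_at,
               l.product, l.quantity, l.unit_price
        FROM orders AS o
        JOIN order_lines AS l ON l.order_id = o.order_id
        WHERE o.created_at > ?
        ORDER BY o.order_id, l.product
    """
    return source.execute(query, (last_load,)).fetchall()


def transform(rows, customer_names):
    """Transform: flatten normalized rows into one denormalized fact row per order line."""
    facts = []
    for order_id, customer_id, created_at, product, quantity, unit_price in rows:
        order_date = created_at[:10]  # keep only the YYYY-MM-DD part
        amount = quantity * unit_price
        facts.append((order_id, customer_names[customer_id], order_date, product, amount))
    return facts


def load(target, facts):
    """Load: store the transformed rows permanently in the target table."""
    target.execute(
        "CREATE TABLE IF NOT EXISTS fact_sales ("
        "order_id INTEGER, customer_name TEXT, order_date TEXT, product TEXT, amount REAL)"
    )
    target.executemany("INSERT INTO fact_sales VALUES (?, ?, ?, ?, ?)", facts)
    target.commit()


def run_demo():
    """Build a tiny in-memory source and target (made-up data), then run one incremental load."""
    source = sqlite3.connect(":memory:")
    source.executescript("""
        CREATE TABLE customers (customer_id INTEGER, name TEXT);
        CREATE TABLE orders (order_id INTEGER, customer_id INTEGER, created_at TEXT);
        CREATE TABLE order_lines (order_id INTEGER, product TEXT, quantity INTEGER, unit_price REAL);
        INSERT INTO customers VALUES (1, 'Acme'), (2, 'Globex');
        INSERT INTO orders VALUES (10, 1, '2024-03-01 09:00:00'), (11, 2, '2024-03-02 14:30:00');
        INSERT INTO order_lines VALUES (10, 'bolt', 5, 1.0), (11, 'nut', 4, 0.5), (11, 'washer', 10, 0.25);
    """)
    target = sqlite3.connect(":memory:")

    customer_names = dict(source.execute("SELECT customer_id, name FROM customers"))
    rows = extract_new_orders(source, last_load="2024-03-02 00:00:00")
    load(target, transform(rows, customer_names))
    return target.execute("SELECT * FROM fact_sales ORDER BY product").fetchall()
```

Calling `run_demo()` loads only the order placed after the previous load. In practice the `last_load` timestamp would be stored after every run, and many tools detect changes in other ways, for example by reading the database log.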

ETL data integration can take place in the cloud (cloud ETL) or simply on an ETL server in your own data center. With “ETL as a service” or “SaaS ETL” you make data integration available on demand. You then only pay for usage: the amount of the bill depends on the size of the data, the number of users or the duration of the ETL process.

What is the relationship between ETL and BI? With BI you want to be able to monitor and analyze data quickly via reports, dashboards or algorithms. For that you need a solid data infrastructure, which you can create with ETL.

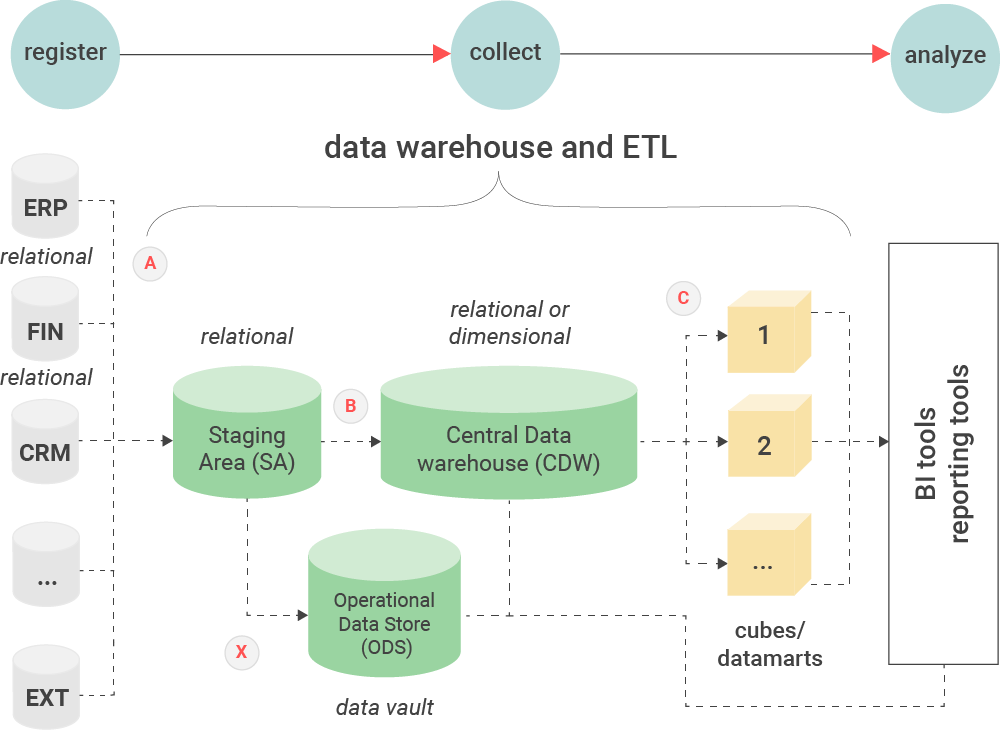

The complete ETL process in one visual

As explained earlier, every ETL process consists of three major steps: extraction, transformation and loading. Often you need not one process but several. For example, to update a data warehouse every night you might need two hundred ETL processes. Each process is responsible for a piece of the total transformation you want to achieve. If the overall ETL run takes too long, it won’t be finished by morning and the numbers on your dashboard won’t be updated. So the key is to make sure your ETL runs quickly and finishes on time. A maximum of 2 hours is an acceptable limit here. If it takes longer, we advise you to switch to real-time ETL.
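As a rough illustration of the time limit mentioned above, the sketch below adds up the runtimes of a nightly run and checks them against a 2-hour window. It assumes the processes run one after another, and the runtimes used are made up; the function name `fits_batch_window` is hypothetical.

```python
from datetime import timedelta


def fits_batch_window(durations, window=timedelta(hours=2)):
    """Return (total runtime, fits in window) for processes run one after another."""
    total = sum(durations, timedelta())
    return total, total <= window


# Hypothetical nightly run: 200 processes of 30 seconds each.
nightly = [timedelta(seconds=30)] * 200
total, on_time = fits_batch_window(nightly)
print(total, on_time)
```

If processes run in parallel, the total is determined by the longest chain of dependent processes rather than the plain sum, so this check would be too pessimistic.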

Figure 1: The ETL process and associated architecture.

In addition to the extraction, transformation and loading steps, you can also perform data quality checks. The best time to perform these checks is between the extraction and transformation steps. Do you still have questions about this explanation of the ETL process? Then contact us here for further clarification or independent ETL advice.
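A data quality check between extraction and transformation could look like the sketch below. It is only an example under stated assumptions: the field names (`order_id`, `customer_id`, `quantity`) and the three rules (required fields, positive quantity, no duplicate order IDs) are hypothetical and would differ per organization.

```python
def check_quality(rows, required=("order_id", "customer_id", "quantity")):
    """Split extracted rows into valid rows and rejected rows with a reason."""
    valid, rejected = [], []
    seen_ids = set()
    for row in rows:
        missing = [field for field in required if row.get(field) is None]
        if missing:
            rejected.append((row, "missing: " + ", ".join(missing)))
        elif row["quantity"] <= 0:
            rejected.append((row, "quantity must be positive"))
        elif row["order_id"] in seen_ids:
            rejected.append((row, "duplicate order_id"))
        else:
            seen_ids.add(row["order_id"])
            valid.append(row)
    return valid, rejected
```

Only the valid rows continue to the transformation step. The rejected rows, together with the reason, can be written to a separate table or report so that the source data can be corrected.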

ETL management: data governance from A to Z

All ETL in an organization is managed by a data manager or ETL manager. We define ETL management as the responsibility for setting up and managing data definitions and transformations. The manager is assisted by the (freelance) ETL developer, the data architect, the database administrator, the metadata manager and the data custodian. Together, these ETL experts ensure that the ETL processes are well documented and can therefore be properly maintained. At the end of the page you will find frequently asked questions that may also be relevant to ETL management.

Download the ETL & Data Integration Guide™ 2024

Quickly unlock a lot of directly applicable knowledge about ETL, big data and data integration and quickly shortlist the ETL tools that meet your criteria. A 100% independent study that includes up-to-date insights, irrefutable facts and 500 charts.

What are Big Data ETL tools?

Big Data and ETL have had to get used to each other. The ETL tools of ten years ago could process unstructured data such as videos, audio clips or sensor data only very poorly, if at all. Today, almost all ETL tools can process big data and store it in a data lake (source: the ETL & Data Integration Guide 2024). ETL can also use a data lake as a source, for example to fill a table with the number of tweets a company sends per day. Combining (real-time) structured data from a data warehouse with unstructured data from a data lake remains a big challenge. Only highly experienced ETL experts can help you with that. Data integration solutions that can correctly process and combine both types of data are labeled ‘Big Data ETL tools’ in our guide. ‘Big’ here refers not only to large amounts of data, but also to complex data such as videos, documents and sound clips.
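The tweets example can be sketched as follows. This is a simplified illustration that assumes the data lake holds tweets as JSON lines with a hypothetical `created_at` field in ISO format; a real data lake would typically be read through a storage or query service rather than from a list of strings.

```python
import json
from collections import Counter


def tweets_per_day(json_lines):
    """Count tweets per calendar day from raw JSON lines (one tweet per line)."""
    counts = Counter()
    for line in json_lines:
        line = line.strip()
        if not line:
            continue  # skip empty lines in the raw file
        record = json.loads(line)
        day = record["created_at"][:10]  # assumed ISO timestamp, keep YYYY-MM-DD
        counts[day] += 1
    return sorted(counts.items())
```

The resulting list of (day, count) pairs can then be loaded into a table in the data warehouse, where it can be combined with structured data.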

Order our online ETL guide here

In our ETL & Data Integration Guide you will not only get to know all ETL tools, but you can also create your own ETL matrix with the self-service analysis module. In addition, our ETL Guide includes a training module that helps you understand the most important concepts in ETL and data integration. Order here.

Don’t overcomplicate your ETL

ETL is a complex business, and you will quickly get stuck if you don’t fully understand the concept. Our experienced ETL specialists will be happy to help you set up a future-proof data infrastructure and ETL processes. Contact us here to hire an ETL expert and request a quote.

About Passionned Group

Passionned Group is the ETL specialist that helps organizations realize a data-driven organization. Our passionate ETL specialists are happy to help you with issues around data integration, BI and AI.
