The list of ETL tools

Below you will find the names of all important ETL tools; click on an ETL tool for more information. Or order and consult our ETL & Data Integration Guide™ 2022 and quickly make an objective comparison between all these parties. The list has been compiled in order of popularity, and the scores of the various data integration solutions are relative to the ETL tool that scores best in our research based on our criteria. So when a tool achieves only 2 stars, it does not automatically mean that it is a bad ETL tool. Passionned Group is 100% independent, so we are in no way affiliated with the ETL software suppliers.

1. Actian

Actian's best-known ETL & Data Integration products are Actian Avalanche, Actian Dataconnect, Actian Dataflow, Actian Data Integration and Actian NoSQL Object Database. We analyzed and evaluated these products in depth. The ETL software from Actian is characterized by good support for the following topics:

- NoSQL

- Windows

- data management

- data warehouse

- Hadoop

- big data analytics

- ETL

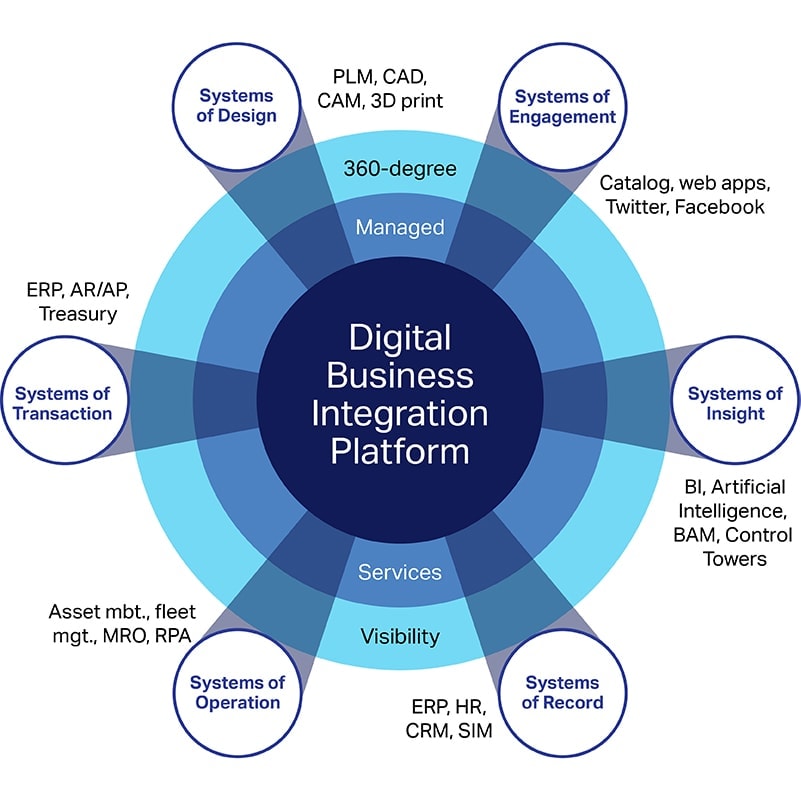

- data integration platform

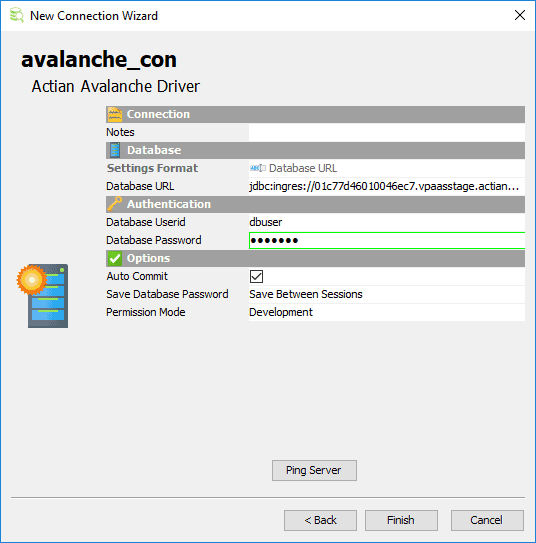

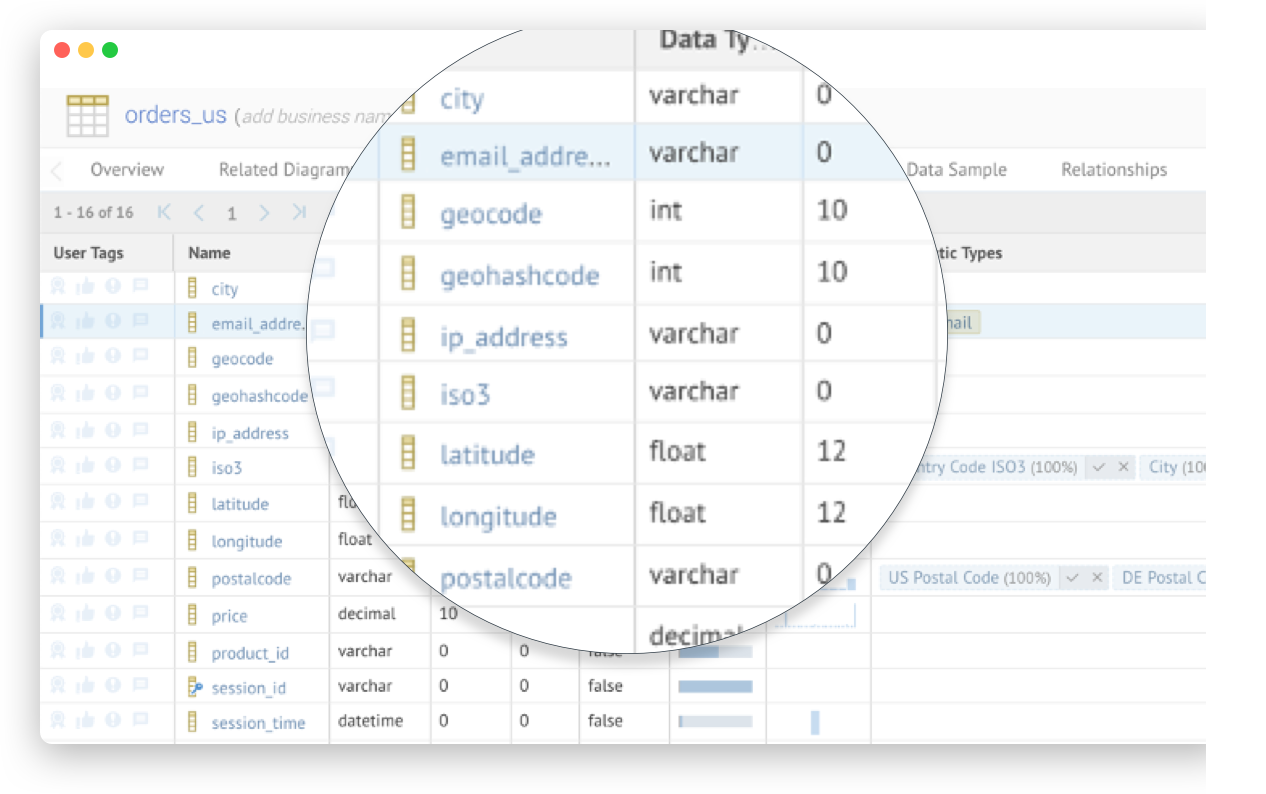

Figure 1: Actian Avalanche

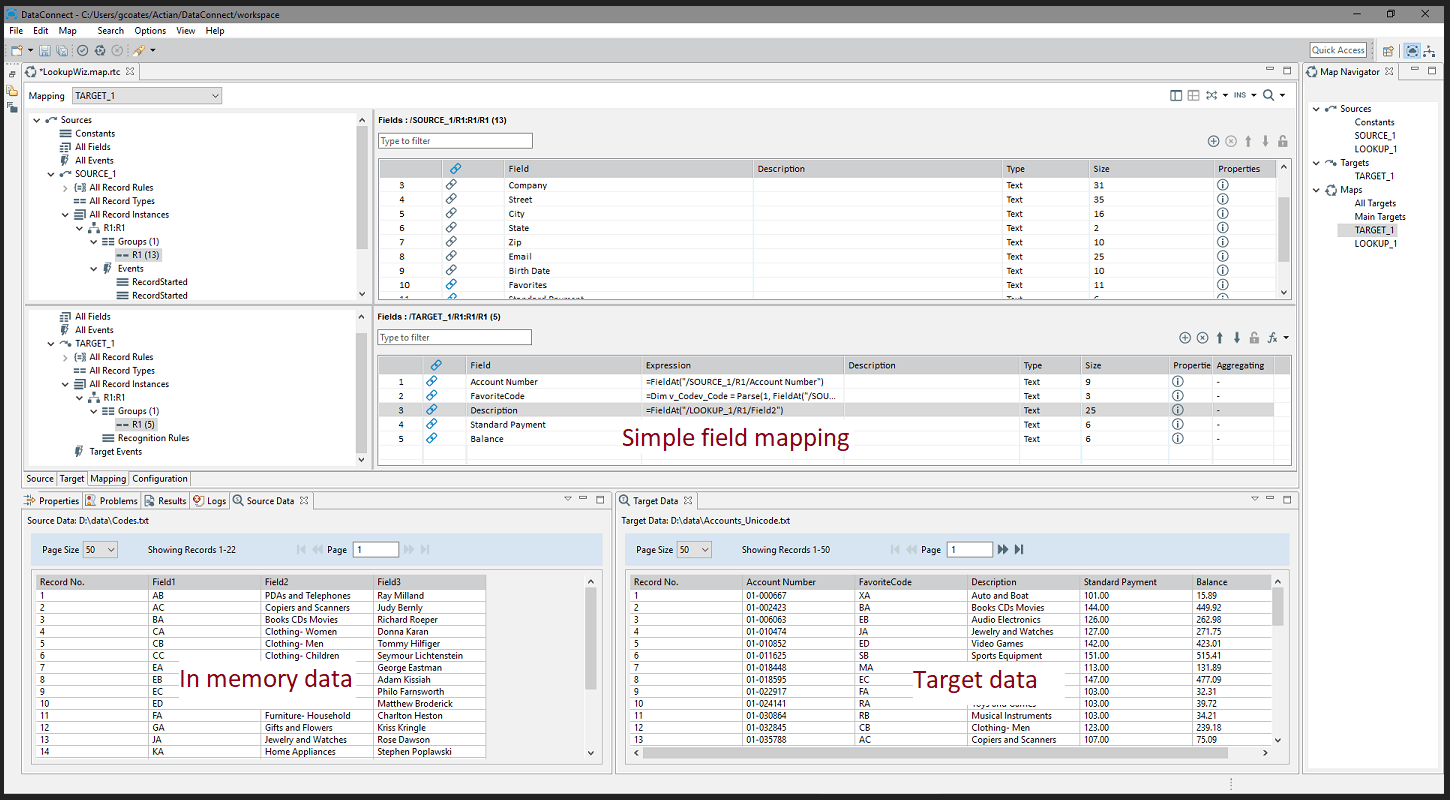

Figure 2: Actian Dataconnect

Figure 3: Actian Dataflow

2. Adeptia

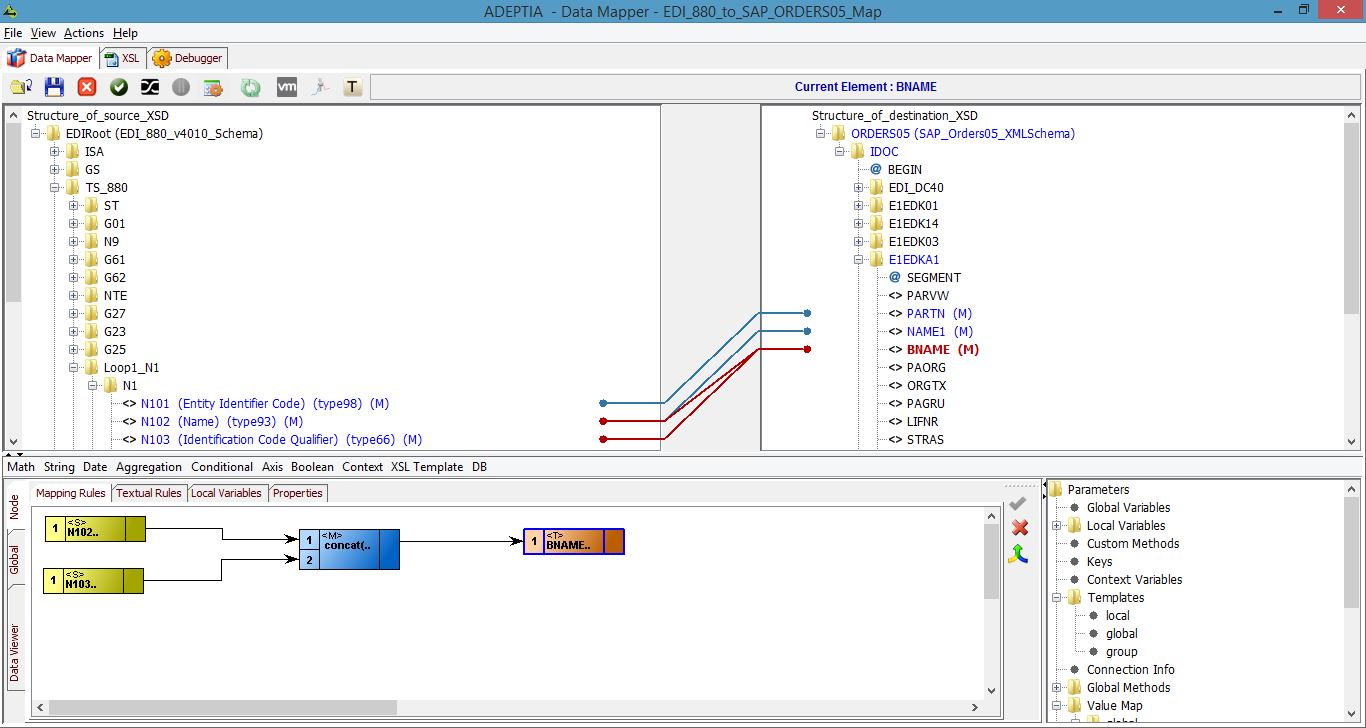

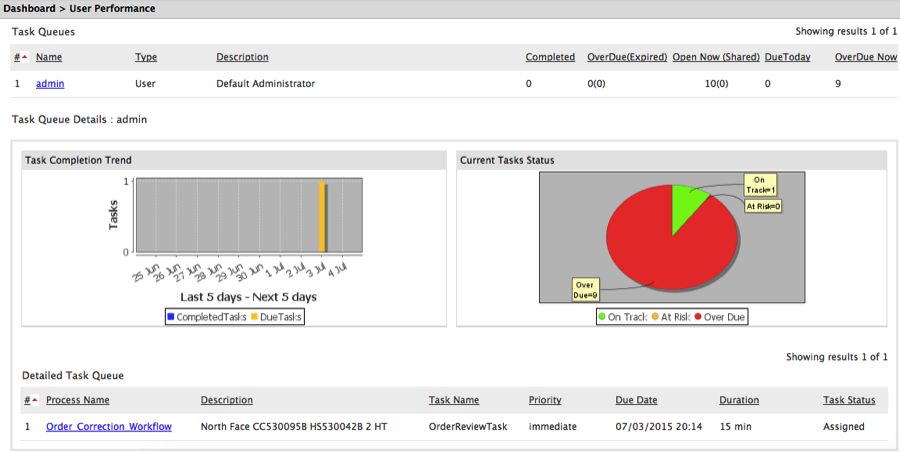

Adeptia's best-known ETL & Data Integration products are Adeptia Connect, Adeptia Suite, Adeptia Integration and Adeptia Server. We analyzed and evaluated these products in depth. The BI ETL tools from Adeptia are characterized by good support for the following topics:

- data integration

- ETL

- BLOB

- API

- self-service data integration

- SOAP

- authentication

- FTP

- data mapping

- JAR

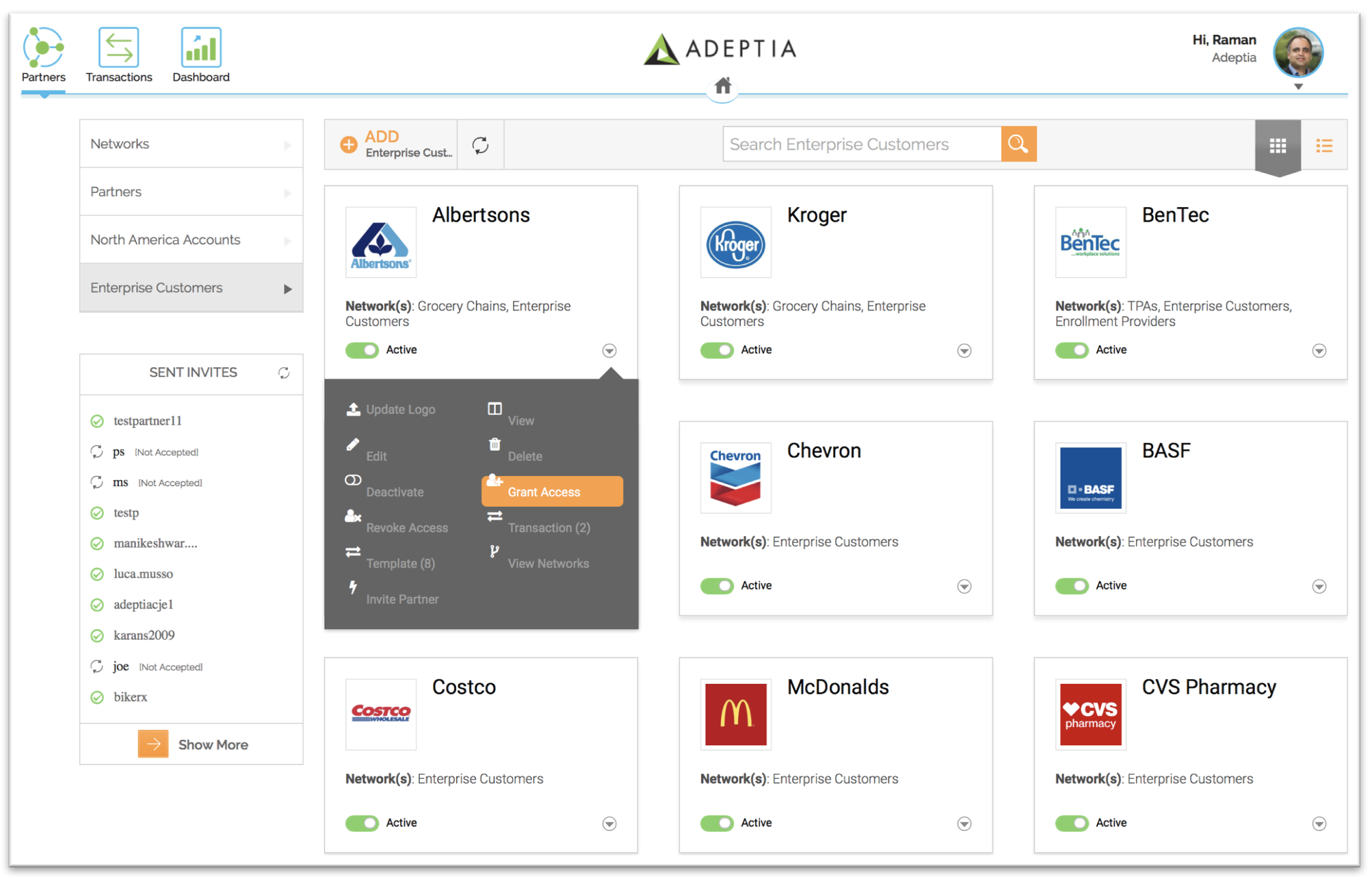

Figure 4: Adeptia Connect

Figure 5: Adeptia Suite

Figure 6: Adeptia Integration

3. Astera

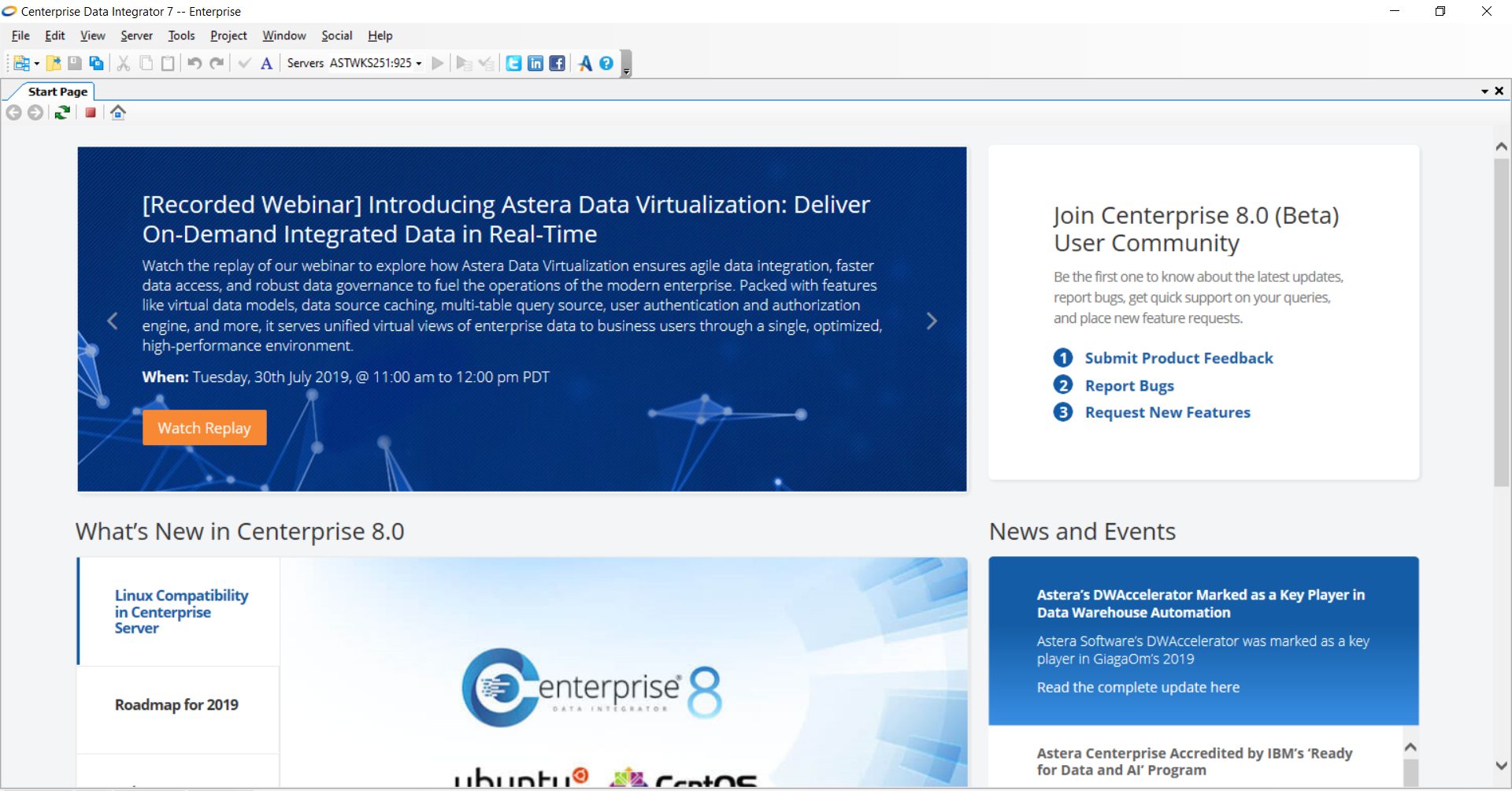

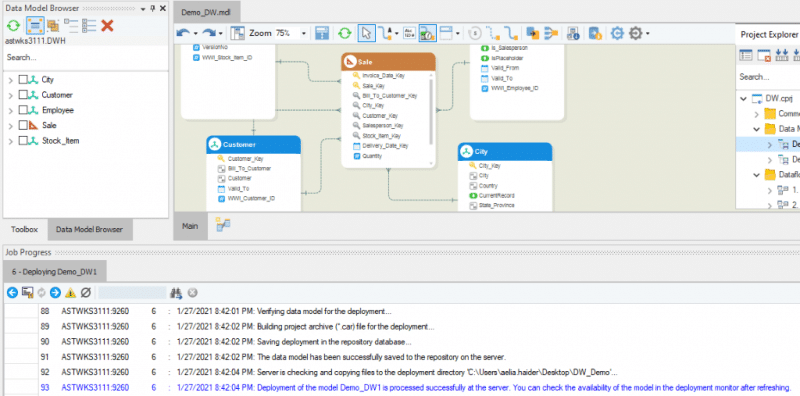

Astera is widely known for its products Astera Centerprise, Astera DW Builder, Astera API Management, Astera ReportMiner and Astera Data Stack. The company is 100% specialized in ETL & Data Integration. Take a look at the images below. The ETL tooling from Astera is strong in the following areas, among others:

- API

- data warehouse

- data extraction

- ETL

- data management

- data quality

- data integration platform

- data transformation

Figure 7: Astera Centerprise

Figure 8: Astera DW Builder

4. CloverDX

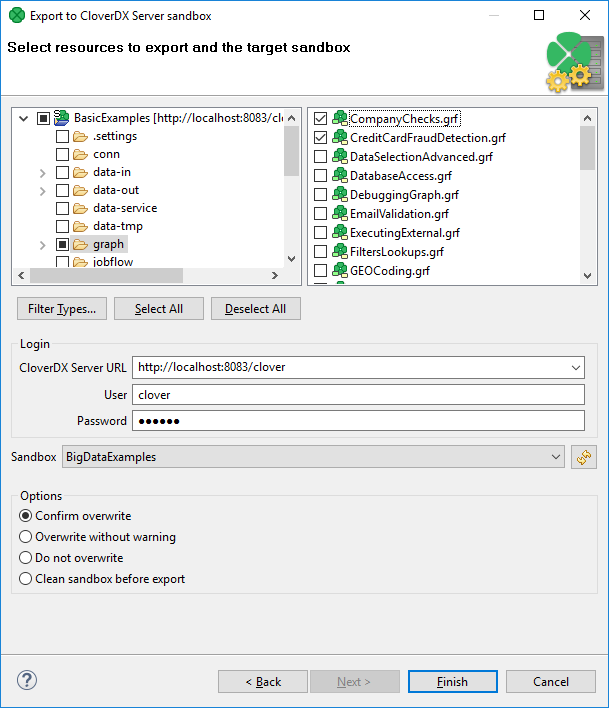

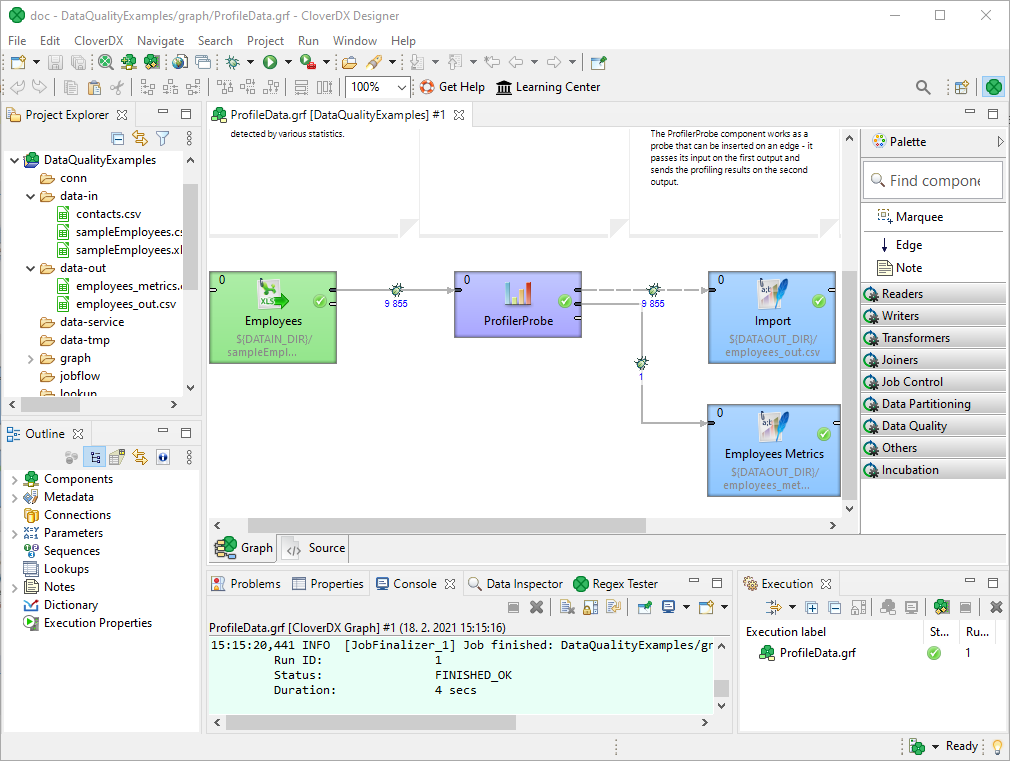

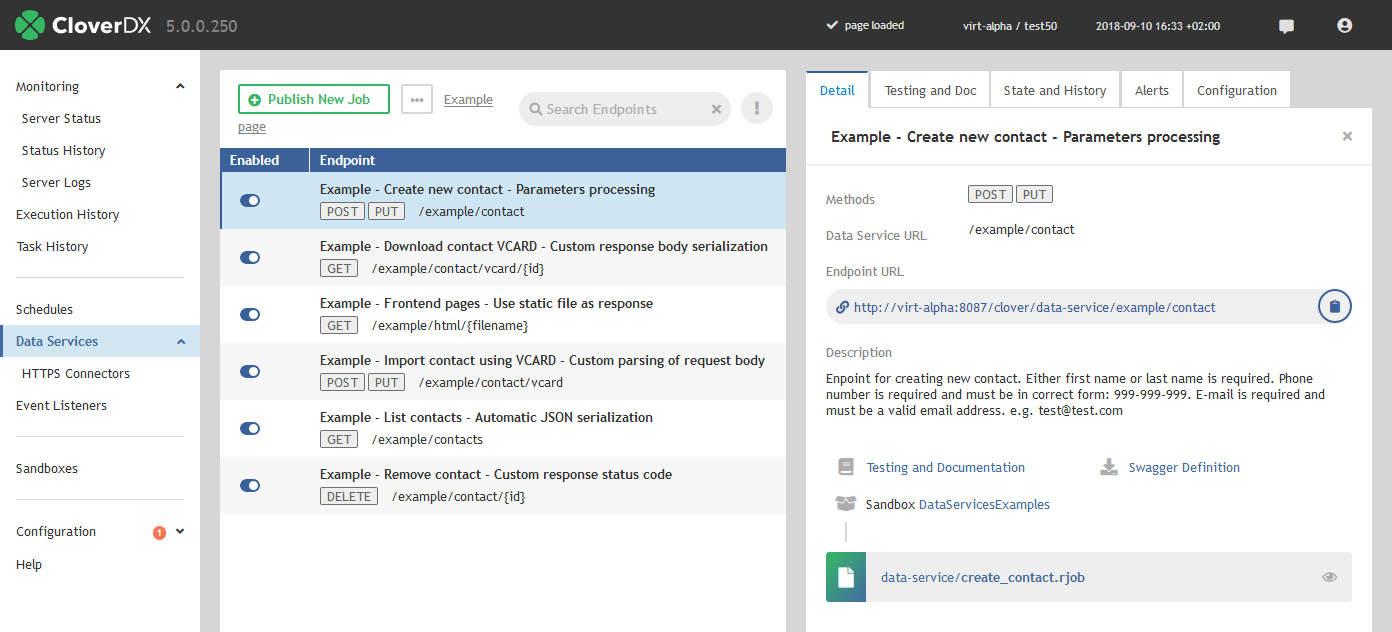

CloverDX is widely known for its products CloverDX Server, CloverDX Designer, CloverDX Data Integration, CloverDX Data Management and CloverDX Data Quality. The company is 100% specialized in ETL & Data Integration. Take a look at the images below. The ETL tools from CloverDX are strong in the following areas, among others:

- data quality

- data management

- ETL

- data integration platform

- API

- data pipelines

- JAR

- JDBC

- SQL

Figure 10: CloverDX Server

Figure 11: CloverDX Designer

Figure 12: CloverDX Data Integration

5. Elixir Tech

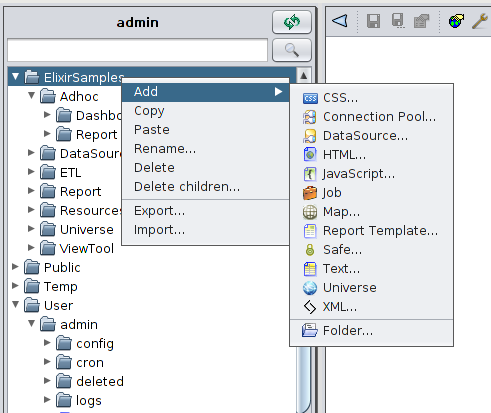

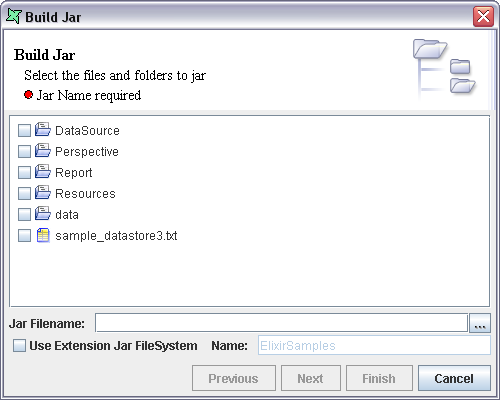

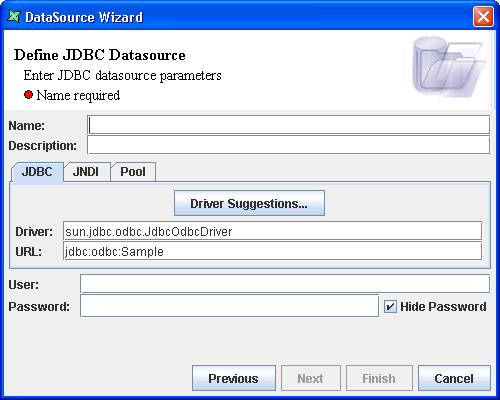

As far as we have been able to ascertain, Elixir Tech currently has only one primary product: Elixir Repertoire. Take a look at the screen shots below. The BI ETL tools from Elixir Tech are strong in the following areas, among others:

- ETL

- data integration

- interoperability

- SOA

- Windows

- XML

- data sources

- API

- JDBC

Figure 13: Elixir Repertoire

Figure 14: Elixir Repertoire

Figure 15: Elixir Repertoire

6. Fivetran

As far as we have been able to ascertain, Fivetran currently has only one primary product: Fivetran System. Take a look at the screen shots below. The ETL tools from Fivetran are strong in the following areas, among others:

- security

- data integration

- ETL

- AWS

7. Hitachi Vantara ETL & Data Integration

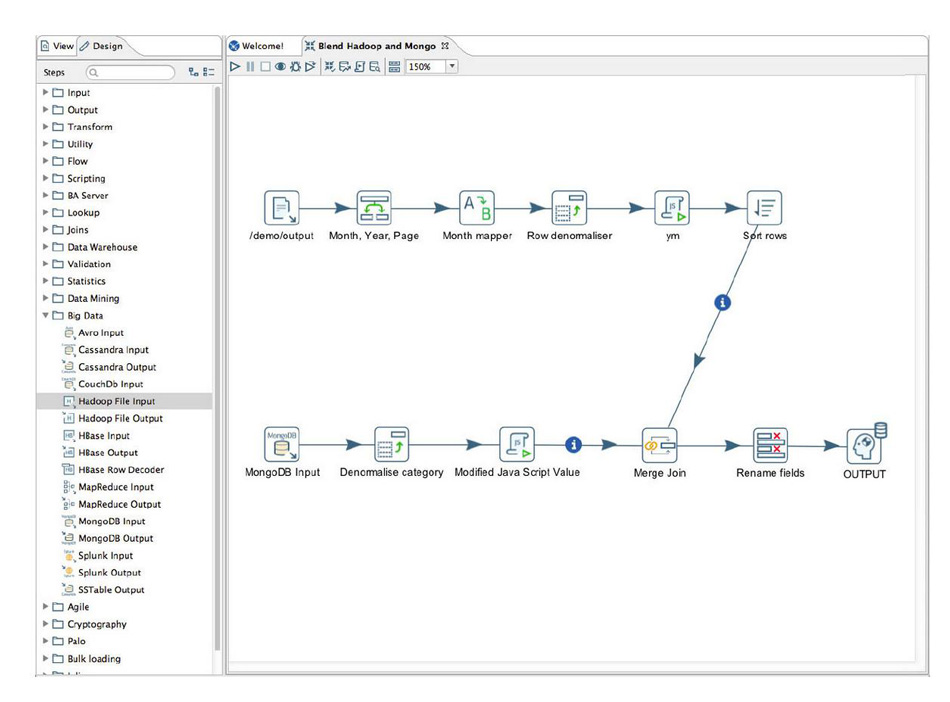

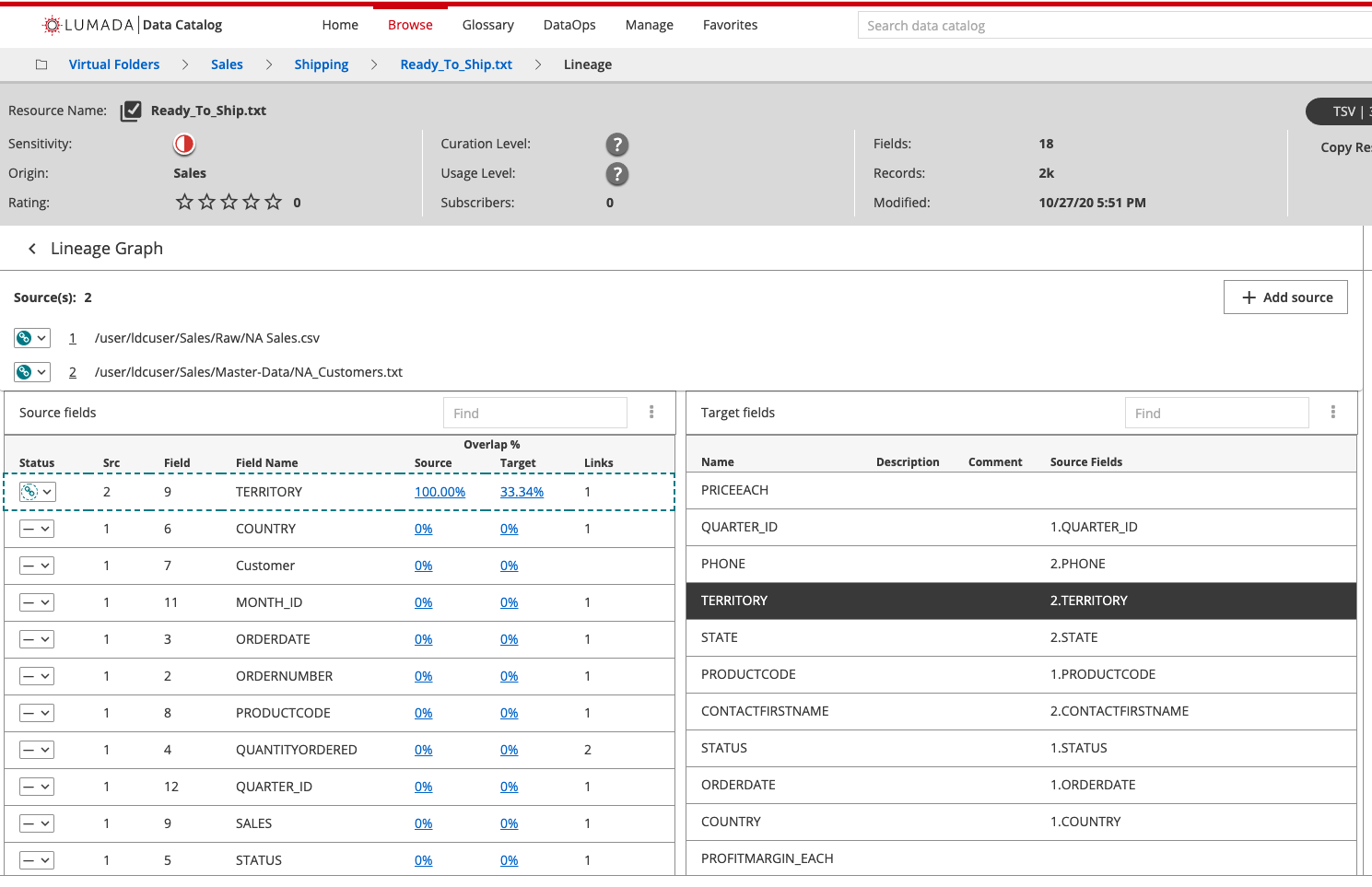

Hitachi Vantara is widely known for its products Pentaho Data Integration, Lumada Data Catalog, Lumada Edge Intelligence, Lumada Data Optimizer and Lumada Data Integration. Take a look at the images below. The ETL tooling from Hitachi Vantara is strong in the following areas, among others:

- data catalog

- data integration

- DataOps

- data management

- Hadoop

- metadata

- ETL

- big data

- operating system

Figure 16: Pentaho Data Integration

Figure 17: Lumada Data Catalog

Figure 18: Lumada Edge Intelligence

8. IBM ETL & Data Integration

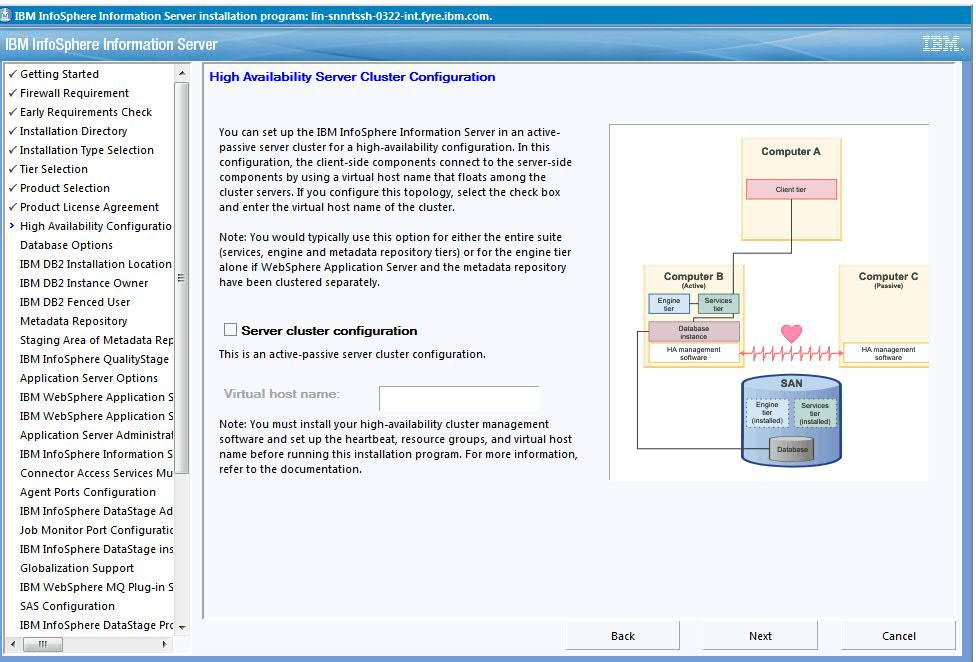

The data integration tools from the ETL & Data Integration vendor IBM focus on supporting the following functionality:

- data quality

- data integration

- data replication

- data management

- master data management

- CDC

- z/OS

- ETL

- Linux

IBM serves the market with the following products, among others: Tivoli, IBM Infosphere Information Server, Infosphere Information Analyzer and Q Replication (see the images below). We took a closer look at them.

Figure 20: IBM Infosphere Information Server

Figure 21: Infosphere Information Analyzer

9. Informatica

Informatica's best-known ETL & Data Integration products are Powerexchange, Informatica PowerCenter, Informatica Intelligent Cloud Services, Informatica Big Data and Informatica Data Quality. We analyzed and evaluated these products in depth. The BI ETL tools from Informatica are characterized by good support for the following topics:

- data integration

- data quality

- data management

- data profiling

- data replication

- cloud data integration

- big data management

- metadata

- Hadoop

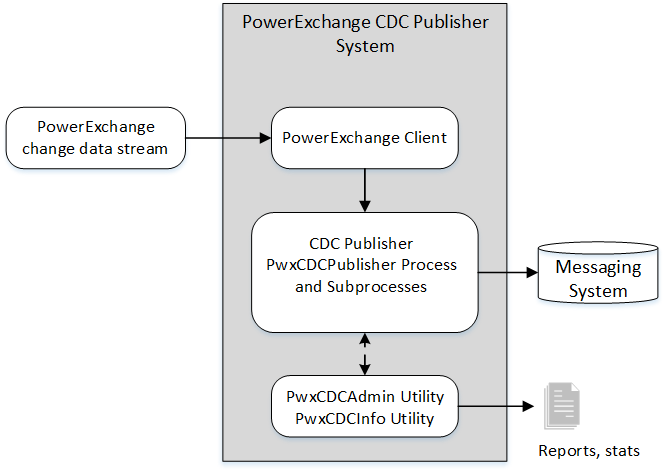

Figure 22: Powerexchange

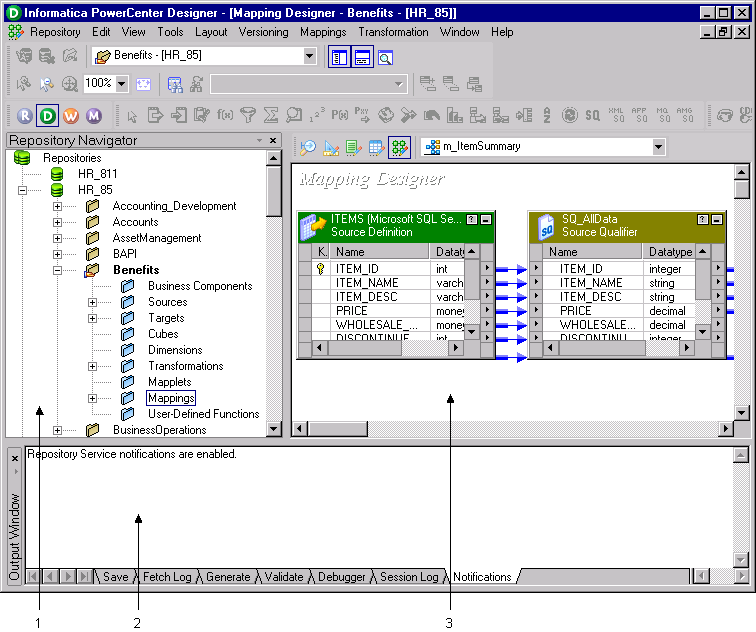

Figure 22: Powerexchange Figure 23: Informatica PowerCenter

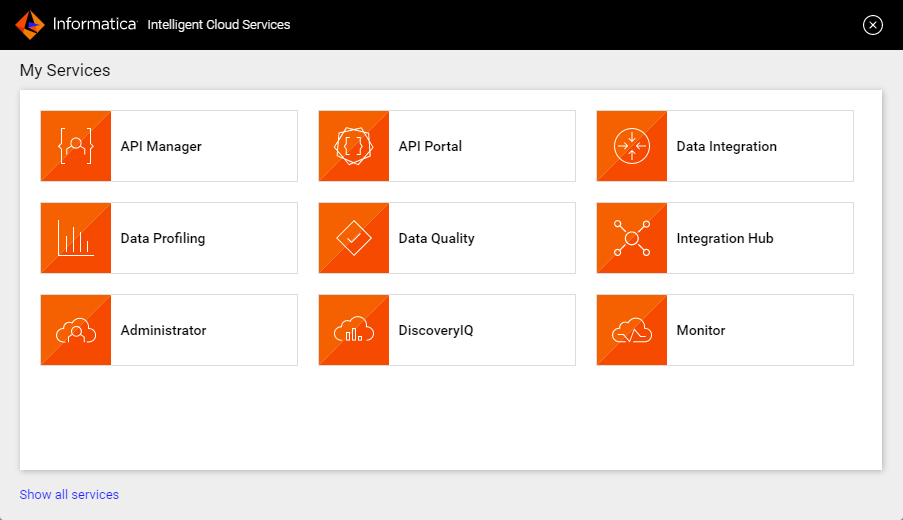

Figure 23: Informatica PowerCenter Figure 24: Informatica Intelligent Cloud Services

Figure 24: Informatica Intelligent Cloud Services10. Integrate.io (Xplenty)

As far as we have been able to ascertain, Integrate.io (Xplenty) currently has only one primary product: Xplenty Platform. Take a look at the screenshots below. The ETL software from Integrate.io (Xplenty) is strong in the following areas, among others:

- ETL

- API

- data pipelines

- data integration platform

- data sources

- ELT

- security

- REST API

- CDC

11. Microsoft ETL & Data Integration

Microsoft is widely known for its products Azure Data Factory, Microsoft Azure, Azure Data Lake, Azure Synapse Analytics and DQS. Take a look at the images below. The ETL tools from Microsoft are strong in the following areas, among others:

- SQL

- SQL Server

- data warehouse

- data quality

- BLOB

- data catalog

- Microsoft SQL Server

- Windows

- security

- data governance

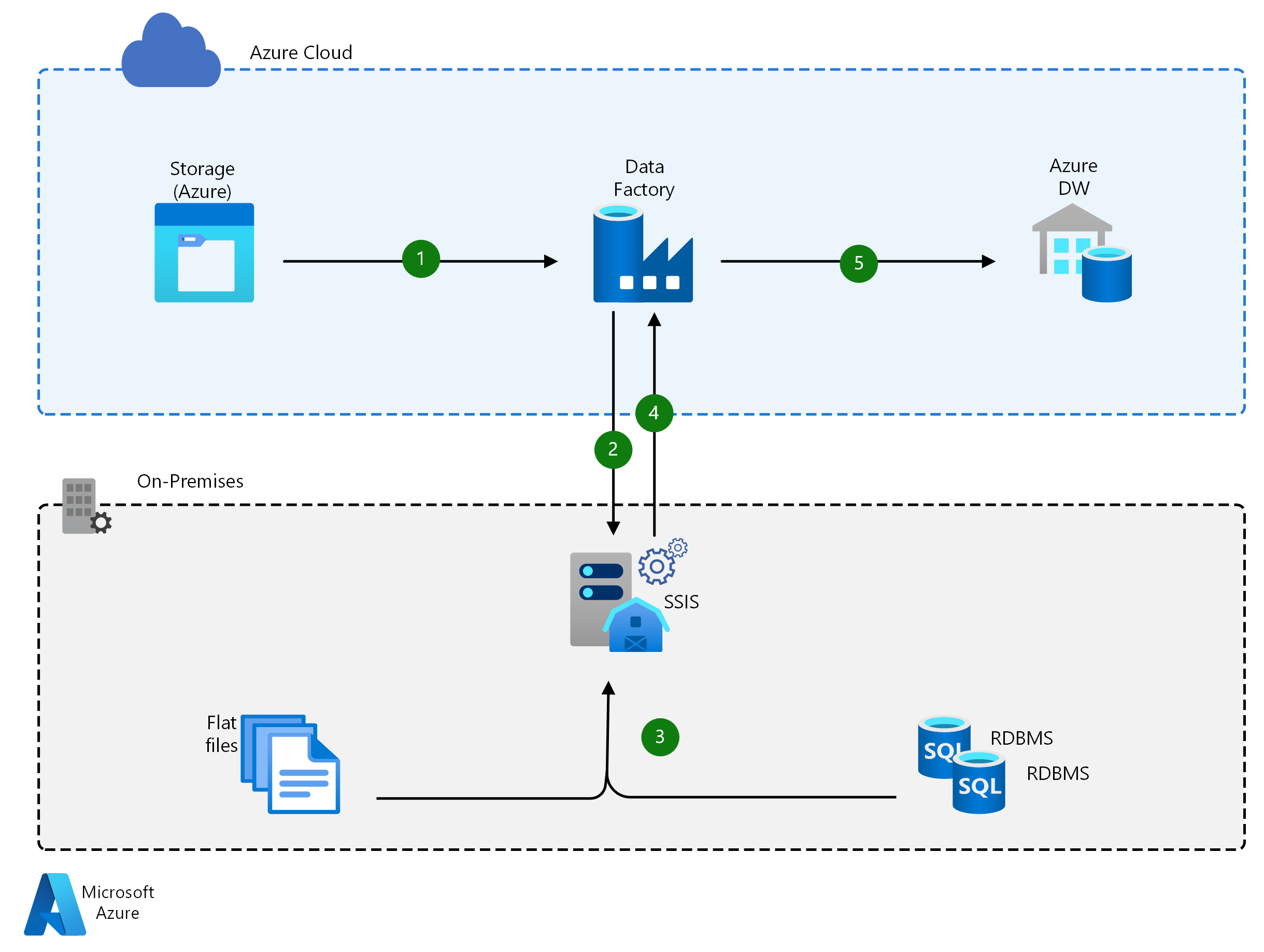

Figure 25: Azure Data Factory

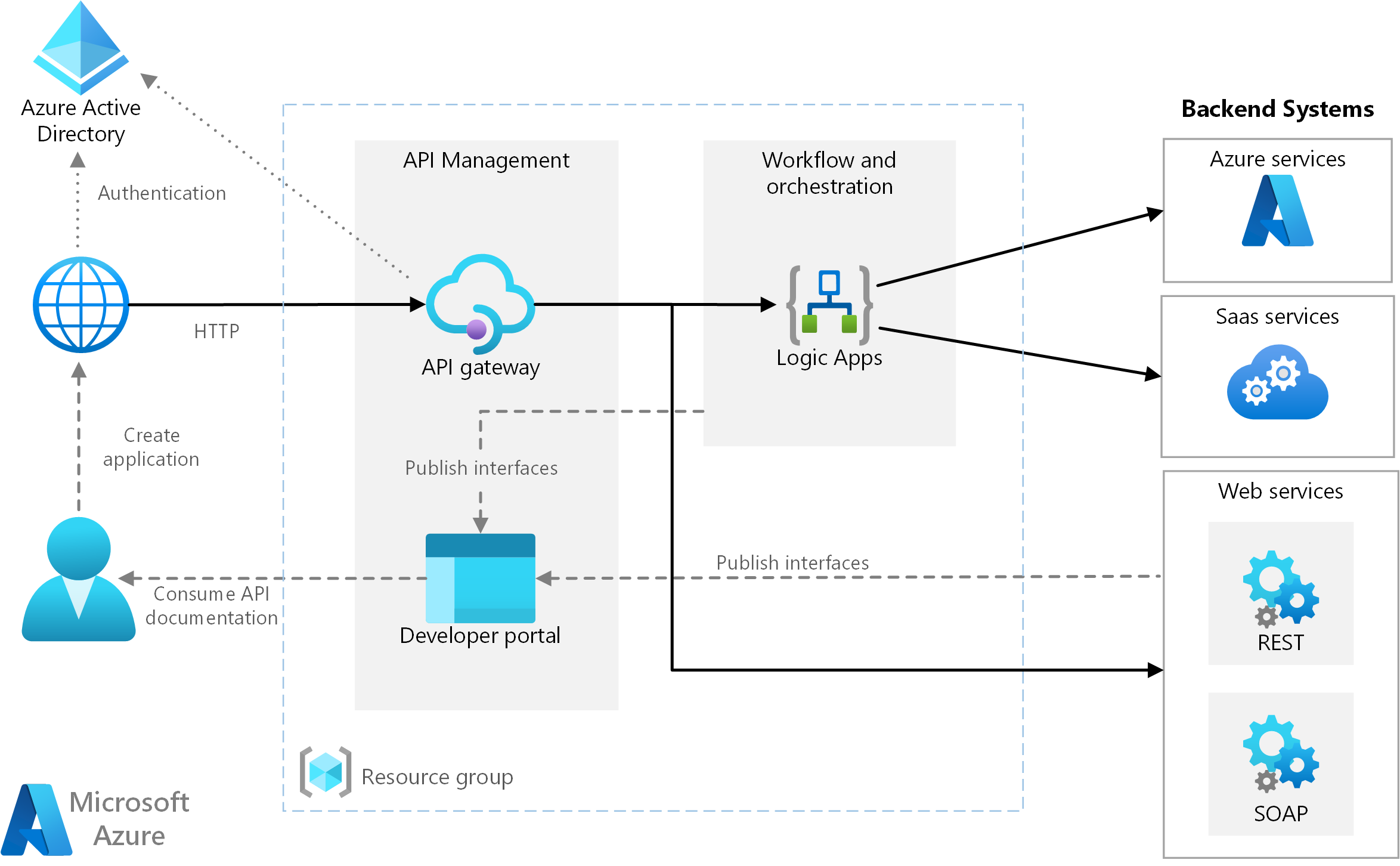

Figure 25: Azure Data Factory Figure 26: Microsoft Azure

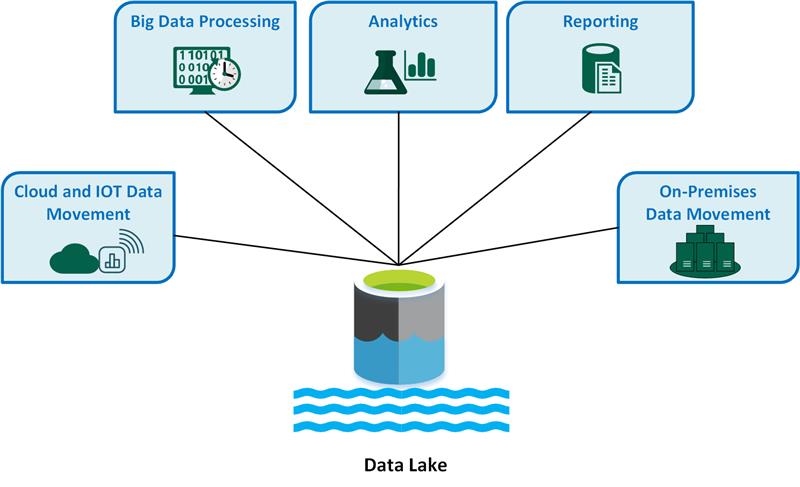

Figure 26: Microsoft Azure Figure 27: Azure Data Lake

Figure 27: Azure Data Lake12. OpenText

The data integration tools from the ETL & Data Integration vendor OpenText focus on supporting the following functionality:

- big data analytics

- file transfer

- managed file transfer

- security

- AWS

- data integration

- data transformation

- data sources

- data mapping

OpenText serves the market with the following products, among others: OpenText Gupta, OpenText Business Network, OpenText Connect, OpenText Contivo and OpenText Big Data (see the images below). We took a closer look at them.

Figure 28: OpenText Gupta

Figure 29: OpenText Business Network

Figure 30: OpenText Connect

13. Oracle ETL & Data Integration

Oracle is widely known for its products Oracle Data Integrator, Oracle Goldengate, Oracle Enterprise Data Quality, Oracle Warehouse Builder and Oracle Streams. Take a look at the images below. The ETL tools from Oracle are strong in the following areas, among others:

- data quality

- data catalog

- data warehouse

- data integration platform

- metadata management

- autonomous data warehouse

- data management

- data profiling

Figure 31: Oracle Data Integrator

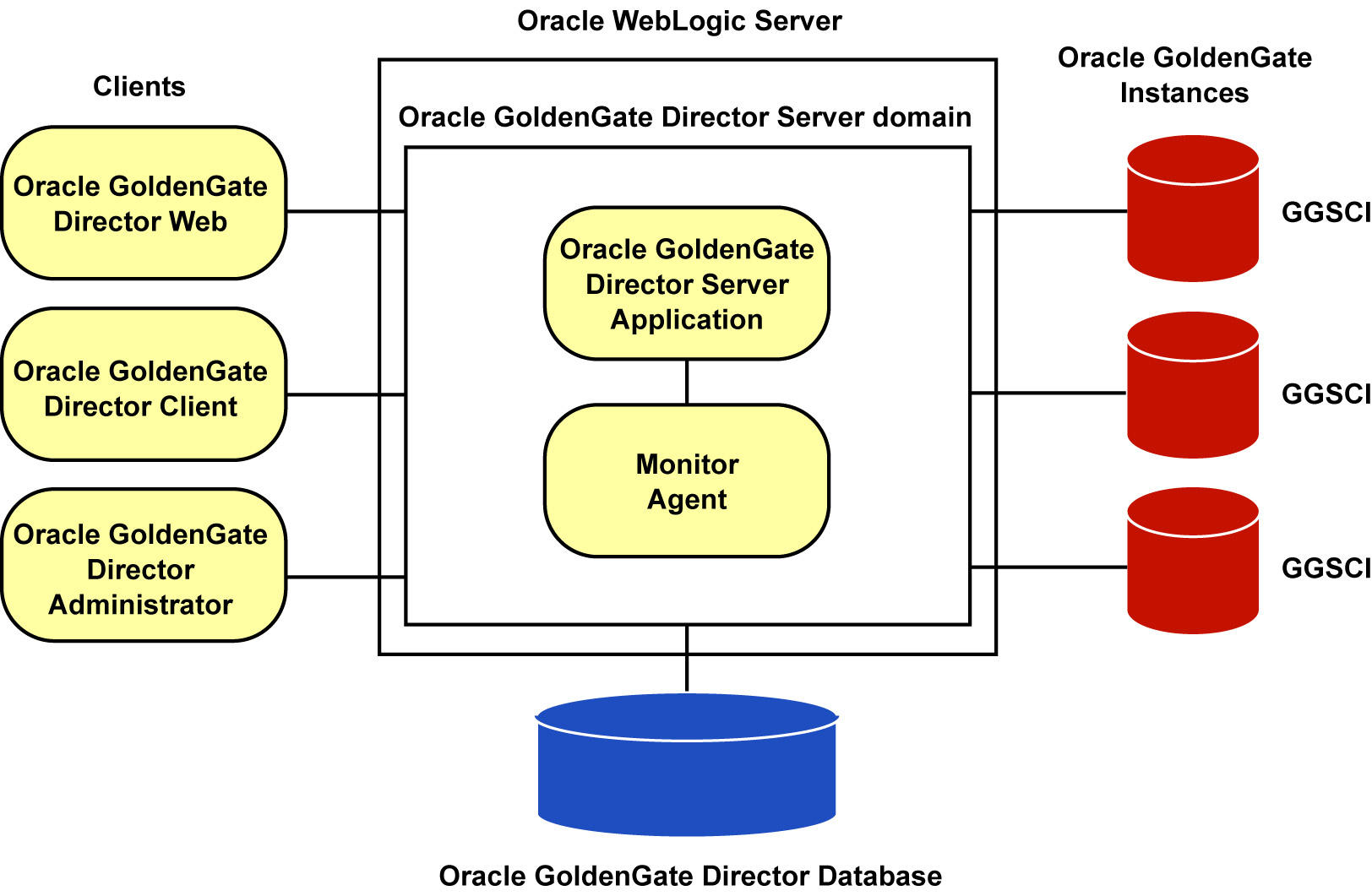

Figure 31: Oracle Data Integrator Figure 32: Oracle Goldengate

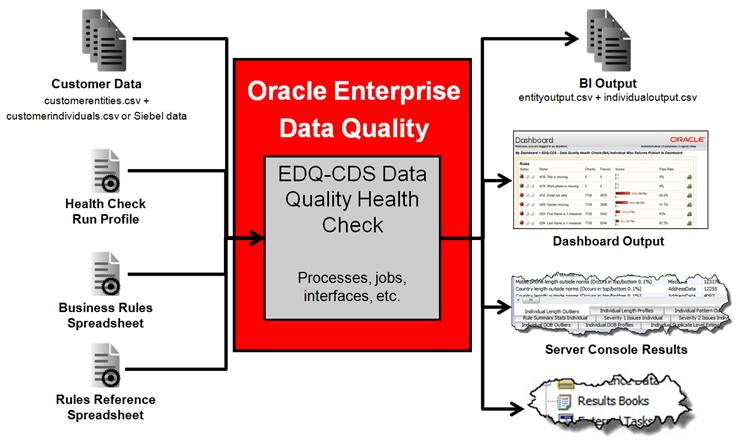

Figure 32: Oracle Goldengate Figure 33: Oracle Enterprise Data Quality

Figure 33: Oracle Enterprise Data Quality14. Precisely

Precisely is widely known for its products Precisely Connect and Precisely Data Integration. The company is 100% specialized in ETL & Data Integration. Take a look at the images below. The ETL tools from Precisely are strong in the following areas, among others:

- data integration

- ETL

- data integrity

- CDC

- big data

- data migration

- API

- AWS

- data pipelines

- data quality

Figure 34: Precisely Connect

Figure 35: Precisely Connect

Figure 36: Precisely Connect

15. Qlik

Qlik is widely known for its products Qlik Replicate, Attunity, Qlik Compose, Qlik Data Integration and Qlik Catalog. The company is 100% specialized in ETL & Data Integration. Take a look at the images below. The ETL tooling from Qlik is strong in the following areas, among others:

- Salesforce

- cloud data integration

- data sources

- API

- data warehouse

- data integration platform

- data pipelines

- real-time data

- data replication

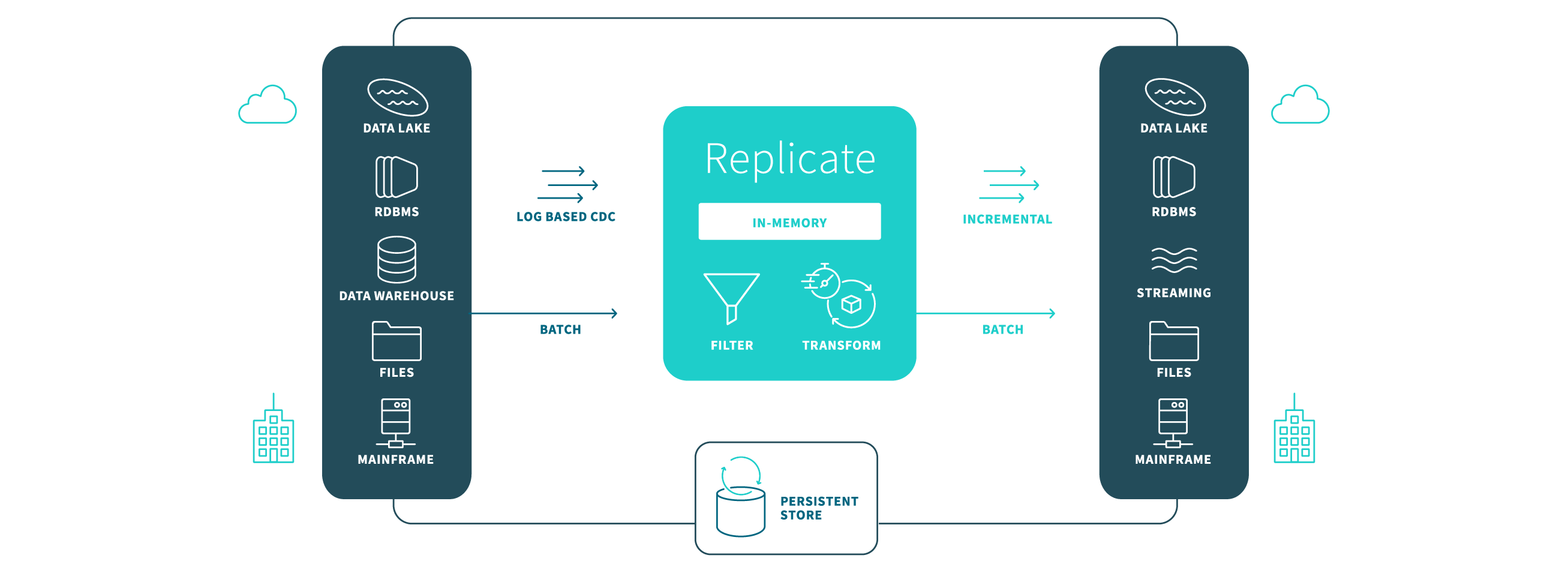

Figure 37: Qlik Replicate

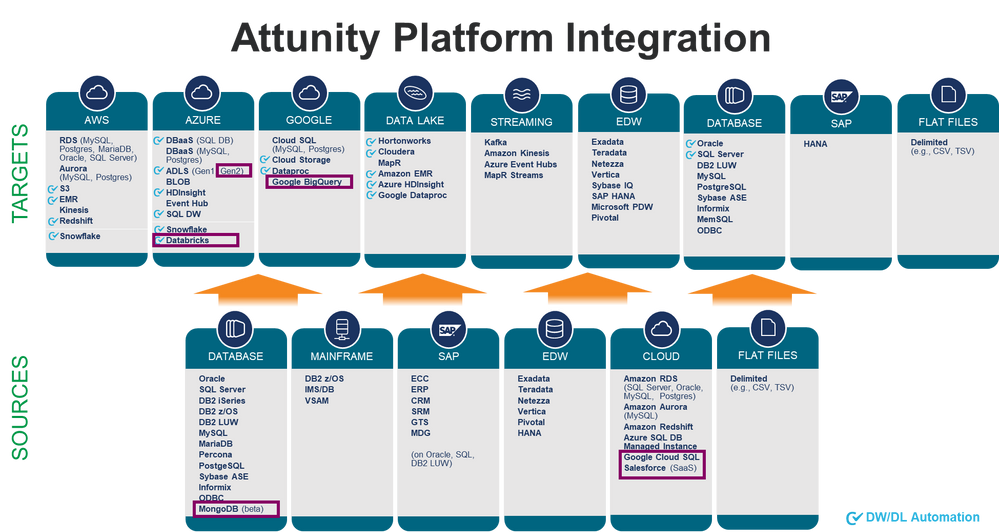

Figure 37: Qlik Replicate Figure 38: Attunity

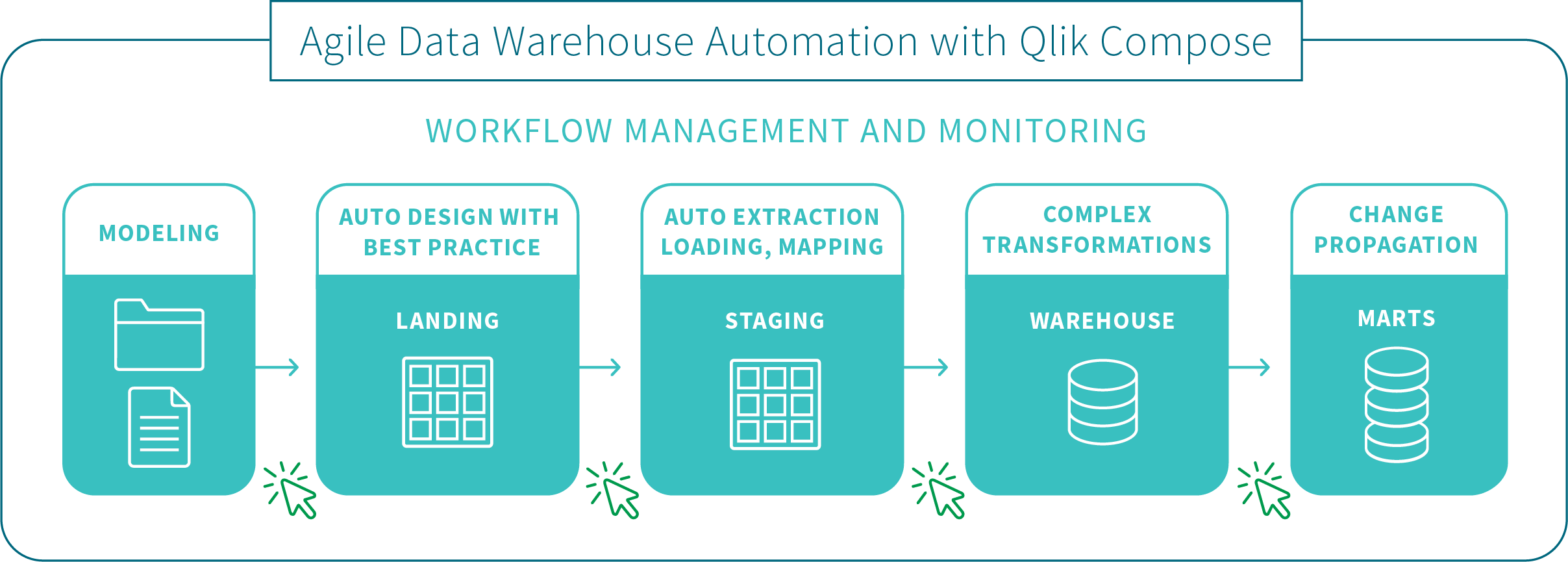

Figure 38: Attunity Figure 39: Qlik Compose

Figure 39: Qlik Compose16. SAP ETL & Data Integration

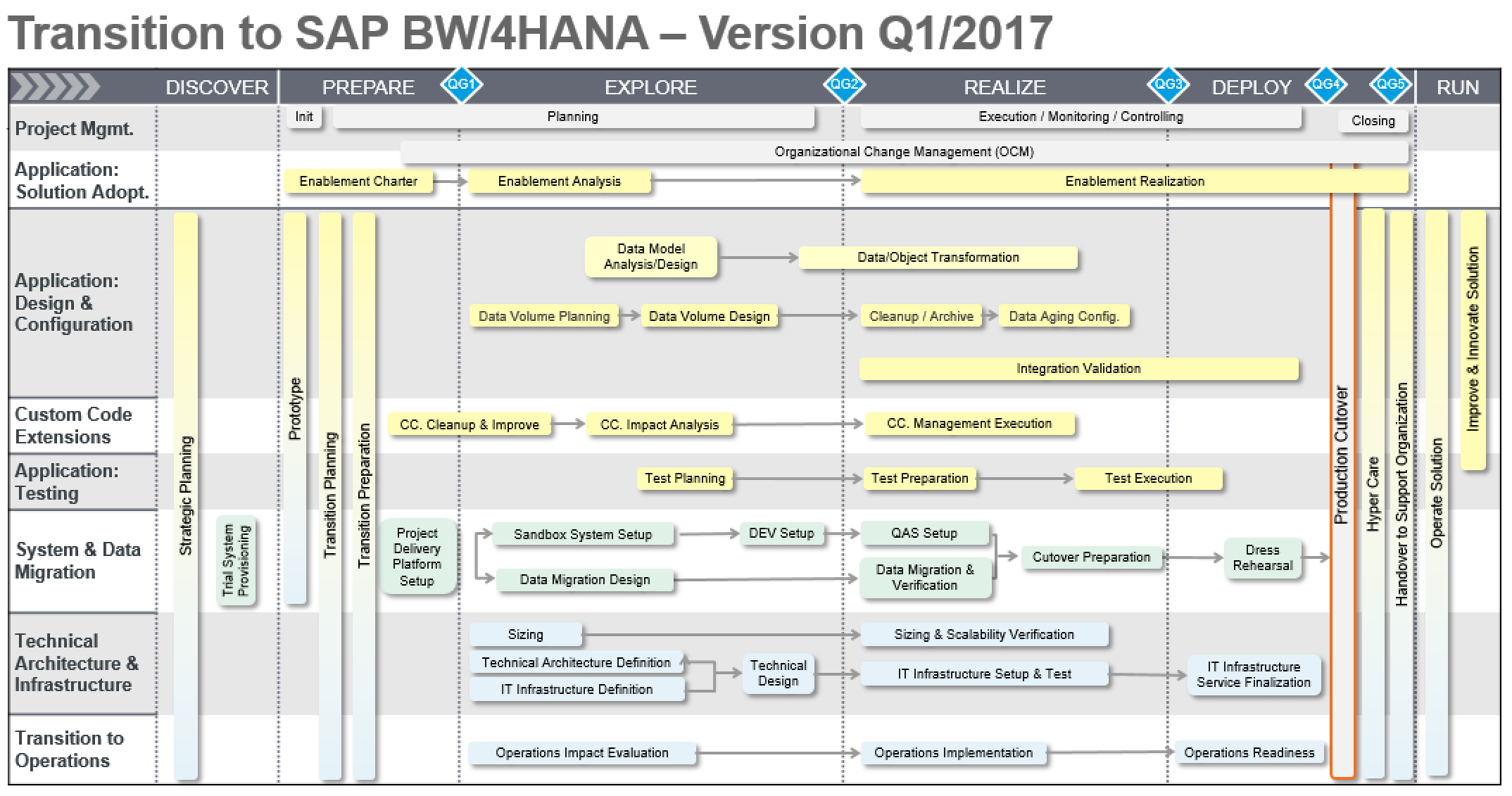

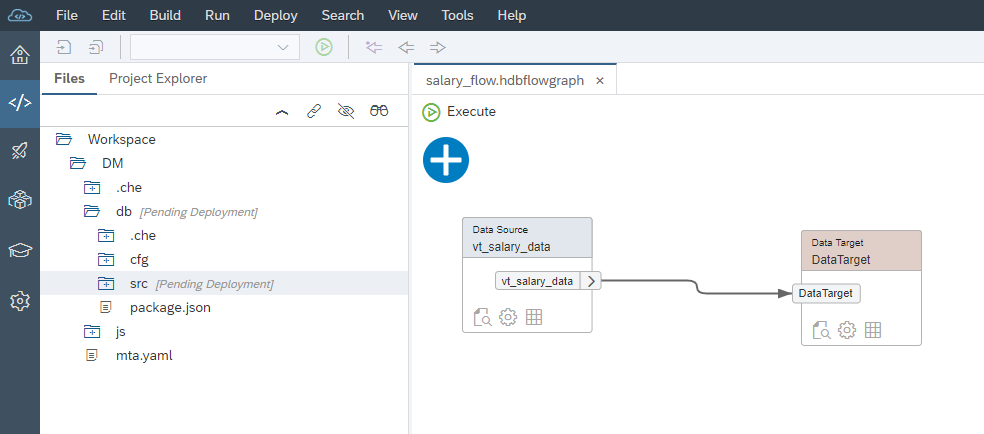

SAP's best-known ETL & Data Integration products are SAP BW, SAP HANA Smart Data Integration, SAP Data Services and SAP Master Data Governance. We analyzed and evaluated these products in depth. The ETL software from SAP is characterized by good support for the following topics:

- data integration

- data governance

- data quality management

- master data integration

- Sybase

- data management

- data warehouse

- master data management

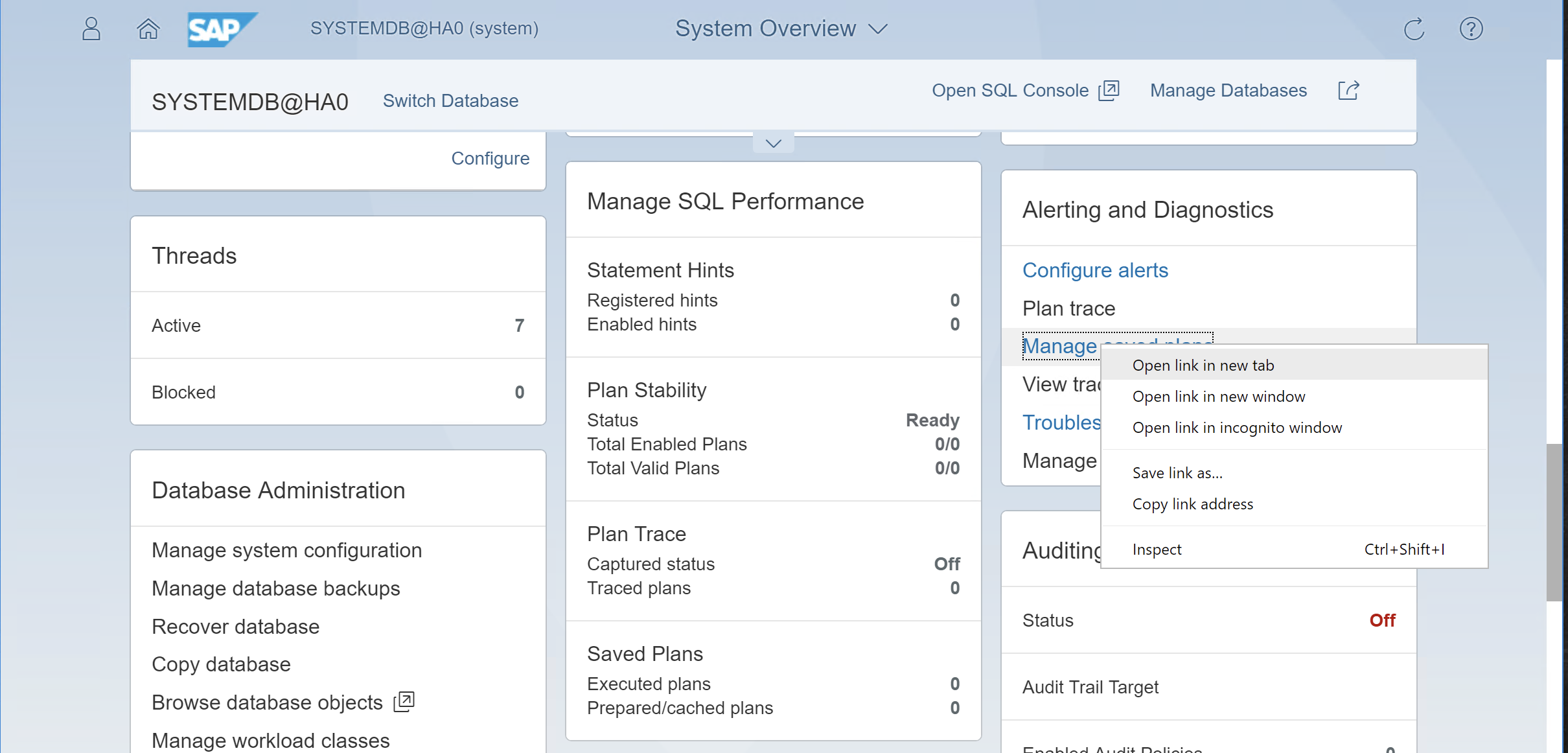

Figure 40: SAP HANA

Figure 41: SAP BW

Figure 42: SAP HANA Smart Data Integration

17. SAS

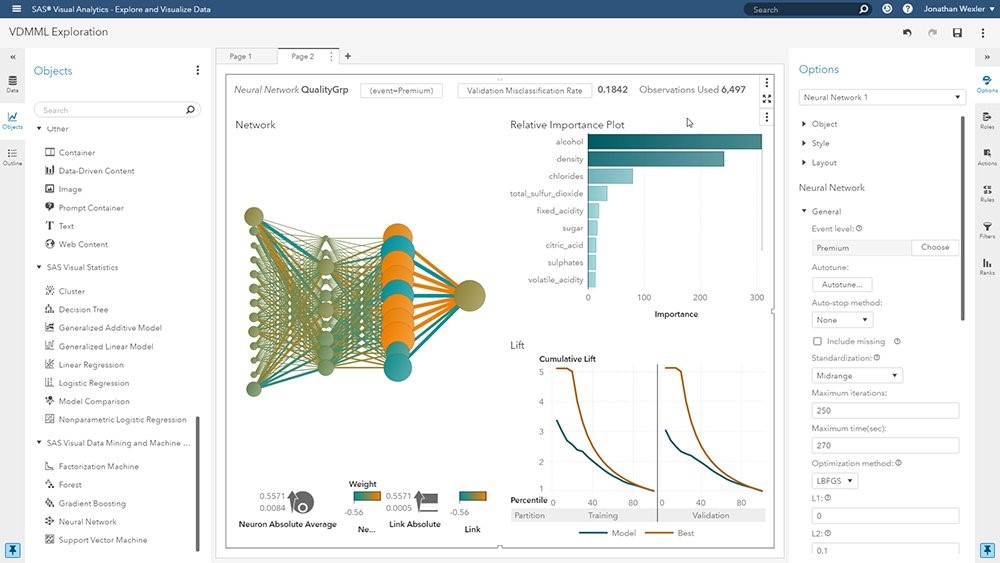

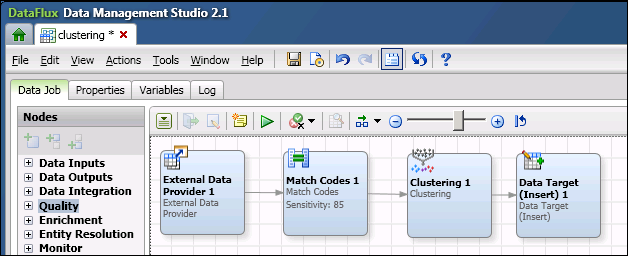

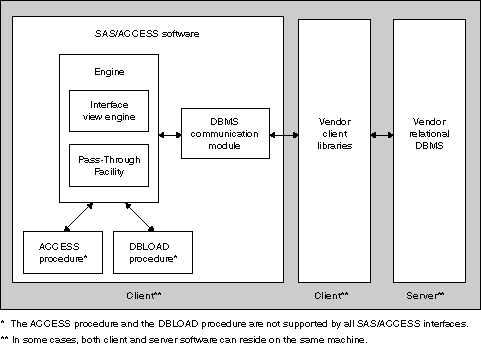

The BI ETL tools from the ETL & Data Integration vendor SAS focus on supporting the following functionality:

- data integration

- data quality

- metadata

- data management

- OLAP

- Hadoop

- ETL

- data warehouse

- data sources

- Teradata

SAS serves the market with the following products, among others: SAS Viya, SAS/ACCESS, SAS Data Integration Studio and SAS Data Quality (see the images below). We took a closer look at them.

Figure 43: SAS Viya

Figure 44: SAS Data Integration

Figure 45: SAS/ACCESS

18. Sesame Software

The data integration tools from the ETL & Data Integration vendor Sesame Software focus on supporting the following functionality:

- data management

- data integration

- data warehouse

- Salesforce

- data sources

- security

- ETL

- data migration

- data protection

- data ingestion

Sesame Software serves the market with the following products, among others: Relational Junction and Sesame Software Relational Junction (see the images below). We took a closer look at them.

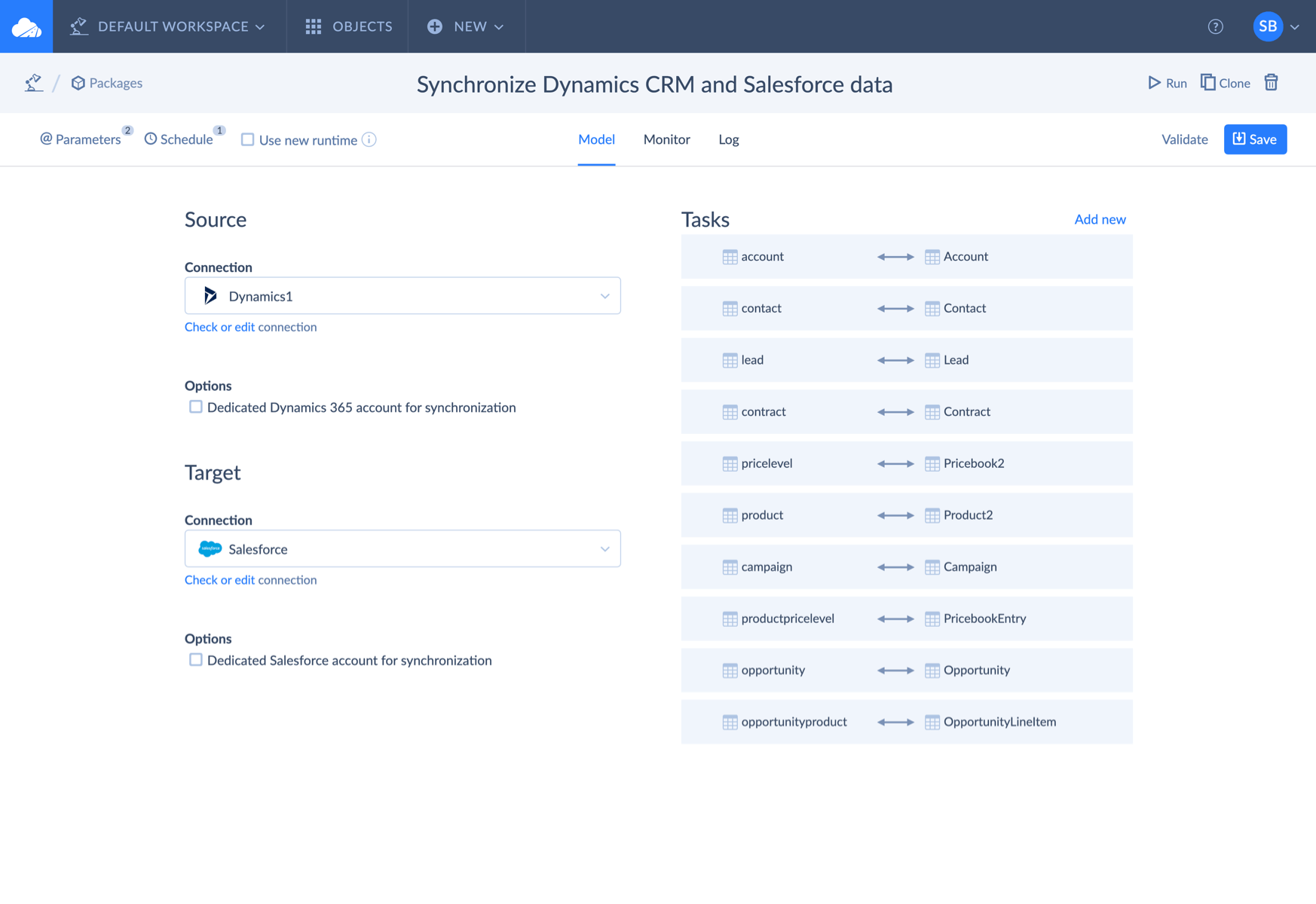

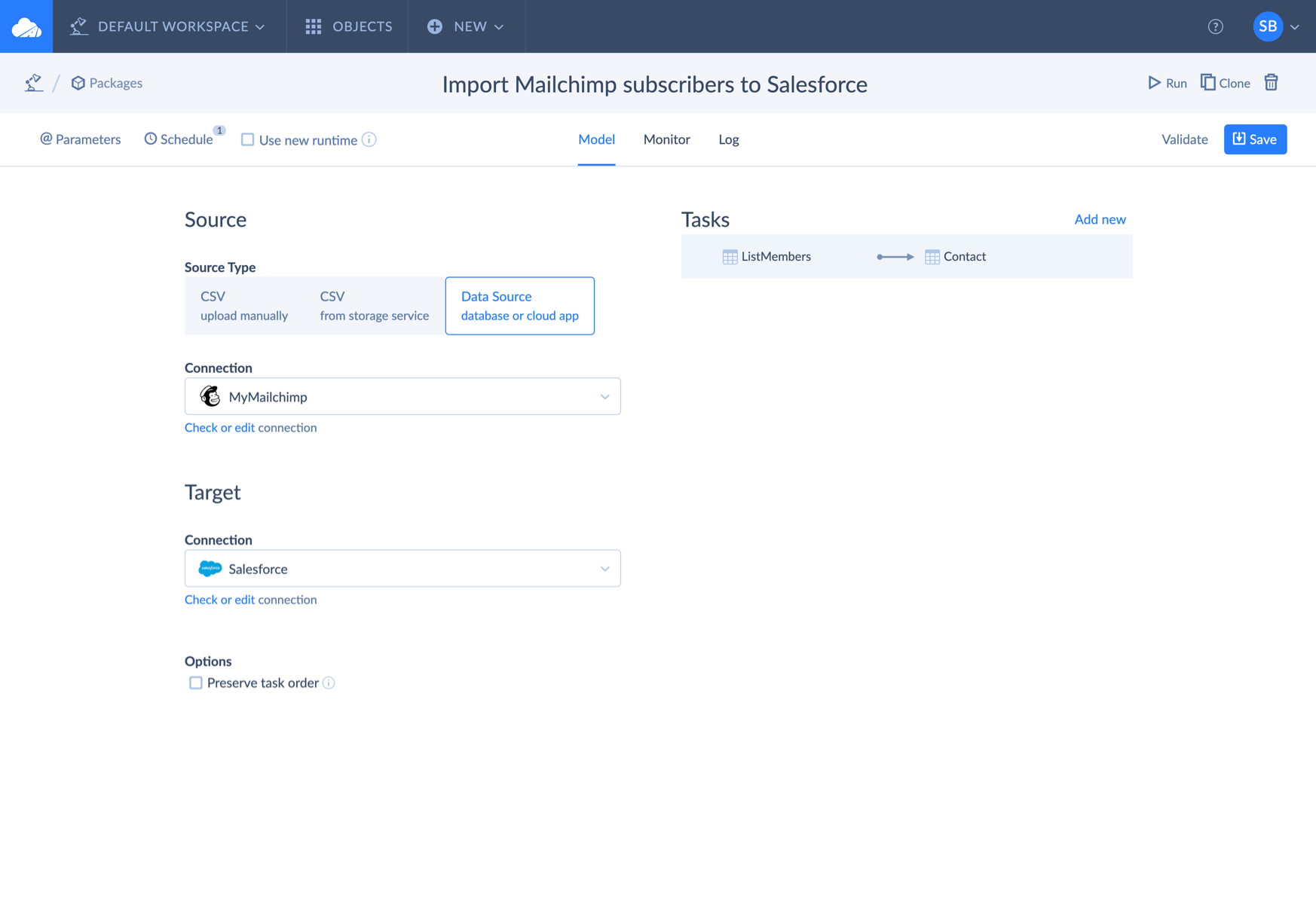

19. Skyvia

As far as we have been able to ascertain, Skyvia currently has only one primary product: Skyvia ETL. Take a look at the screen shots below. The BI ETL tools from Skyvia are strong in the following areas, among others:

- ETL

- data integration

- CSV

- data sources

- AWS

- ELT

- data replication

- MySQL

- Salesforce

- Amazon Redshift

Figure 46: Skyvia ETL

Figure 47: Skyvia ETL

Figure 48: Skyvia ETL

20. SnapLogic

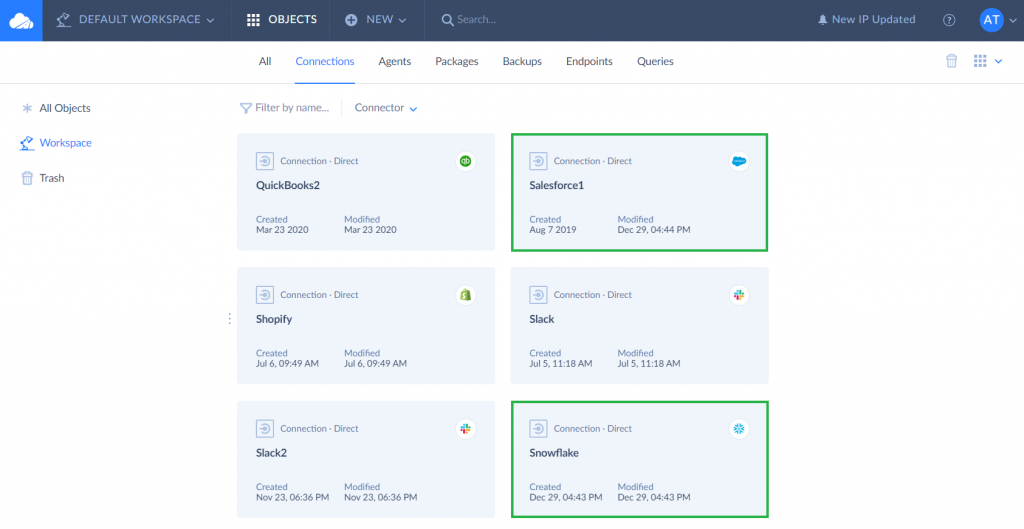

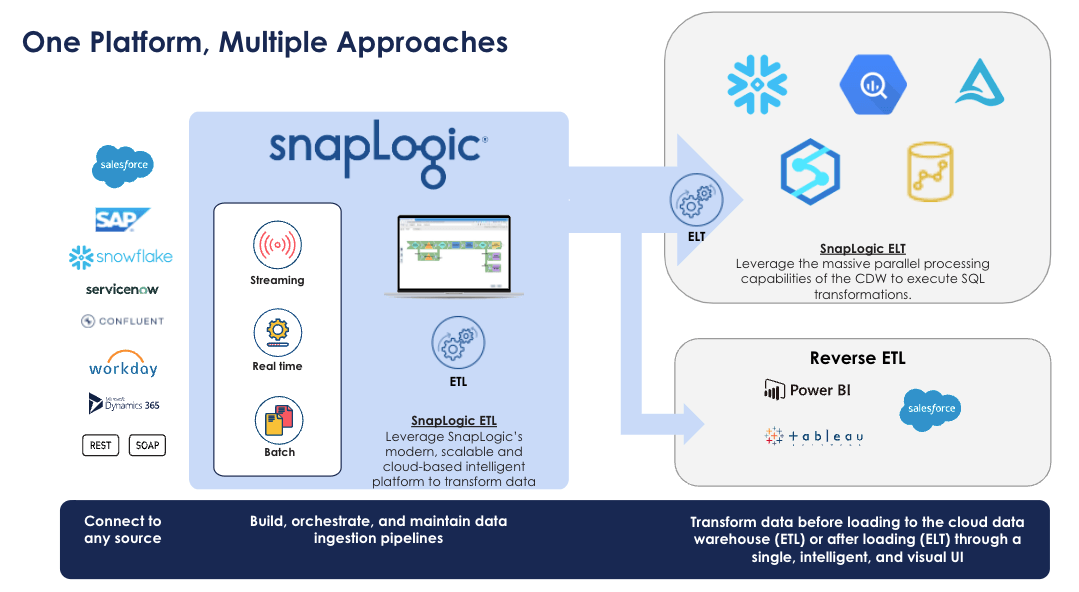

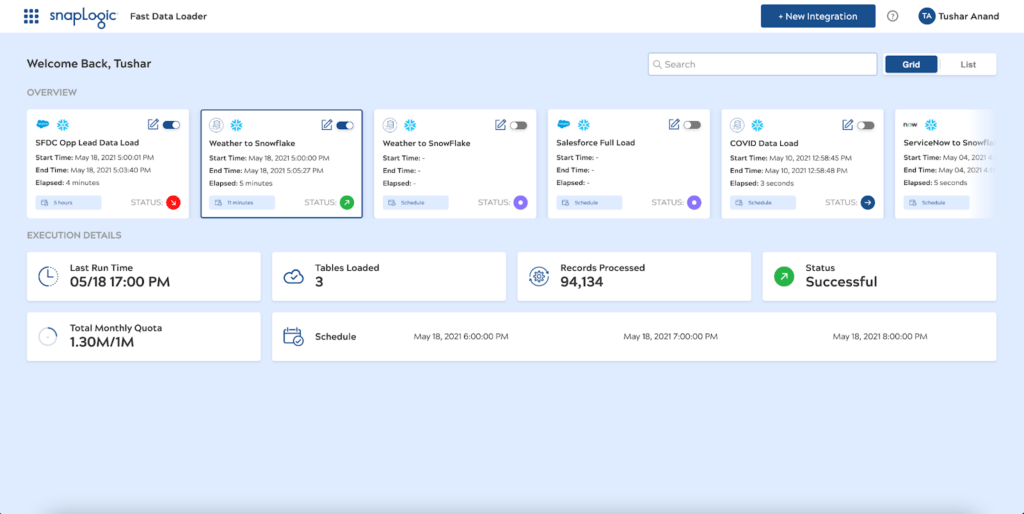

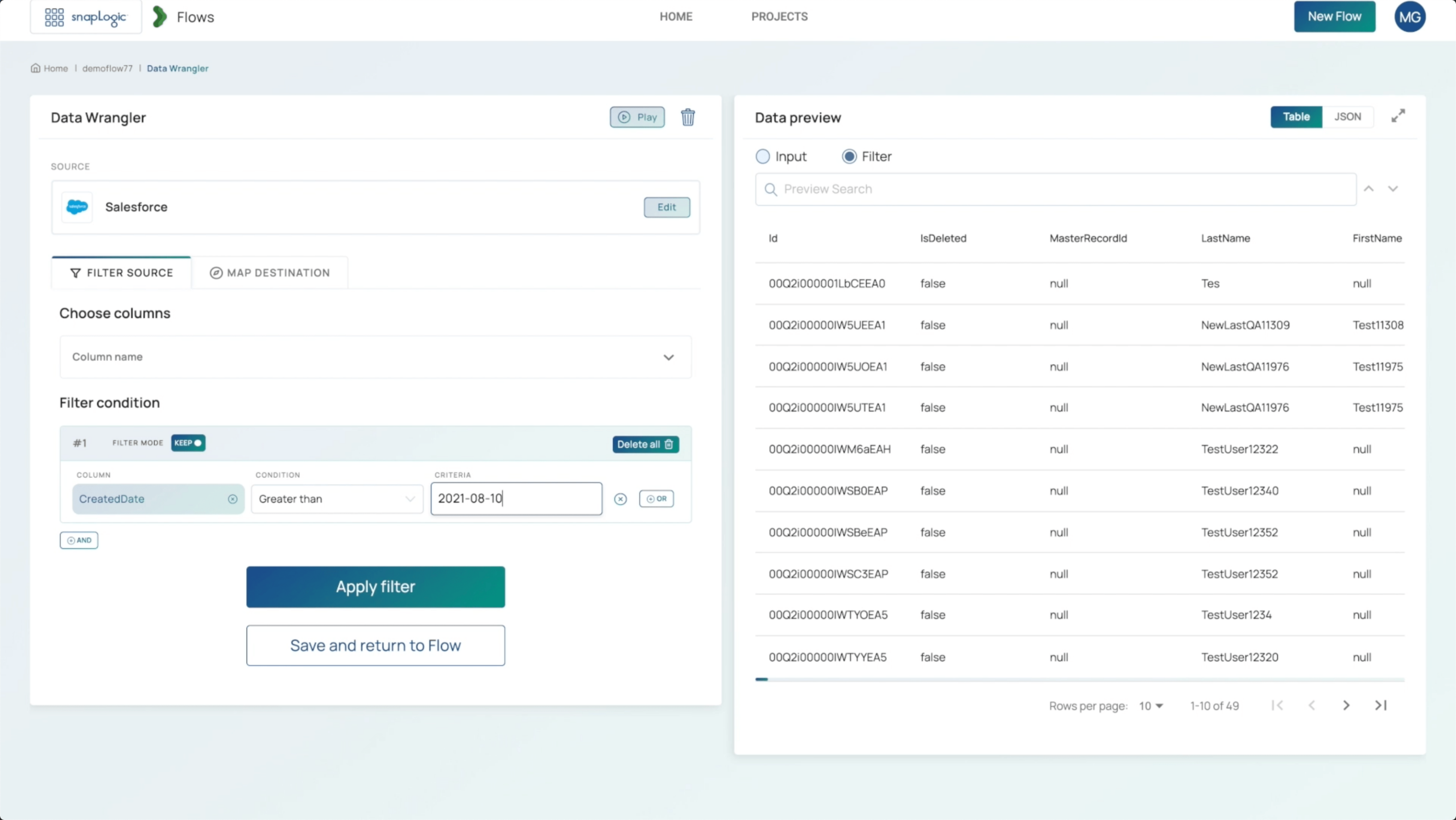

SnapLogic is widely known for its products SnapLogic Intelligent Integration, SnapLogic Fast Data Loader, SnapLogic Flows, SnapLogic Patterns Catalog and SnapLogic Data Catalog. The company is 100% specialized in ETL & Data Integration. Take a look at the images below. The ETL tooling from SnapLogic is strong in the following areas, among others:

- data catalog

- metadata

- data warehouse

- data integration

- ETL

- data pipelines

- API

- Salesforce

- AWS

- data sources

Figure 49: SnapLogic Intelligent Integration

Figure 50: SnapLogic Fast Data Loader

Figure 51: SnapLogic Flows

21. Snowflake

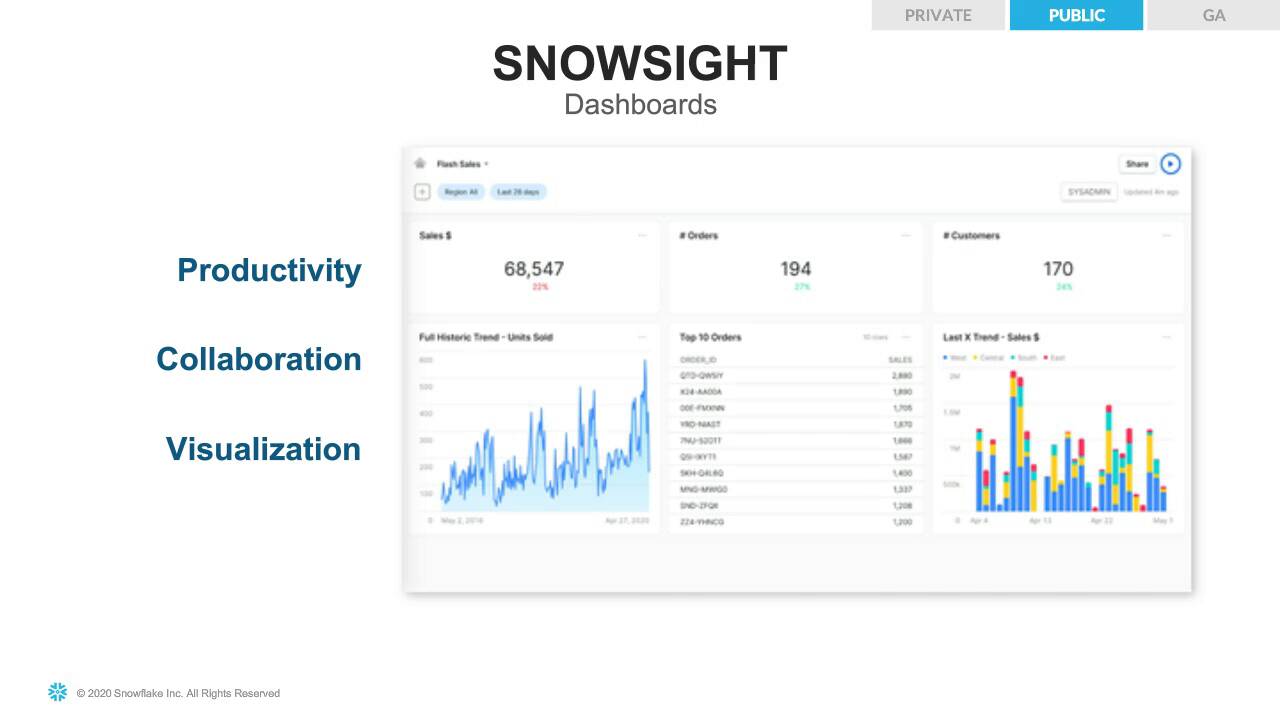

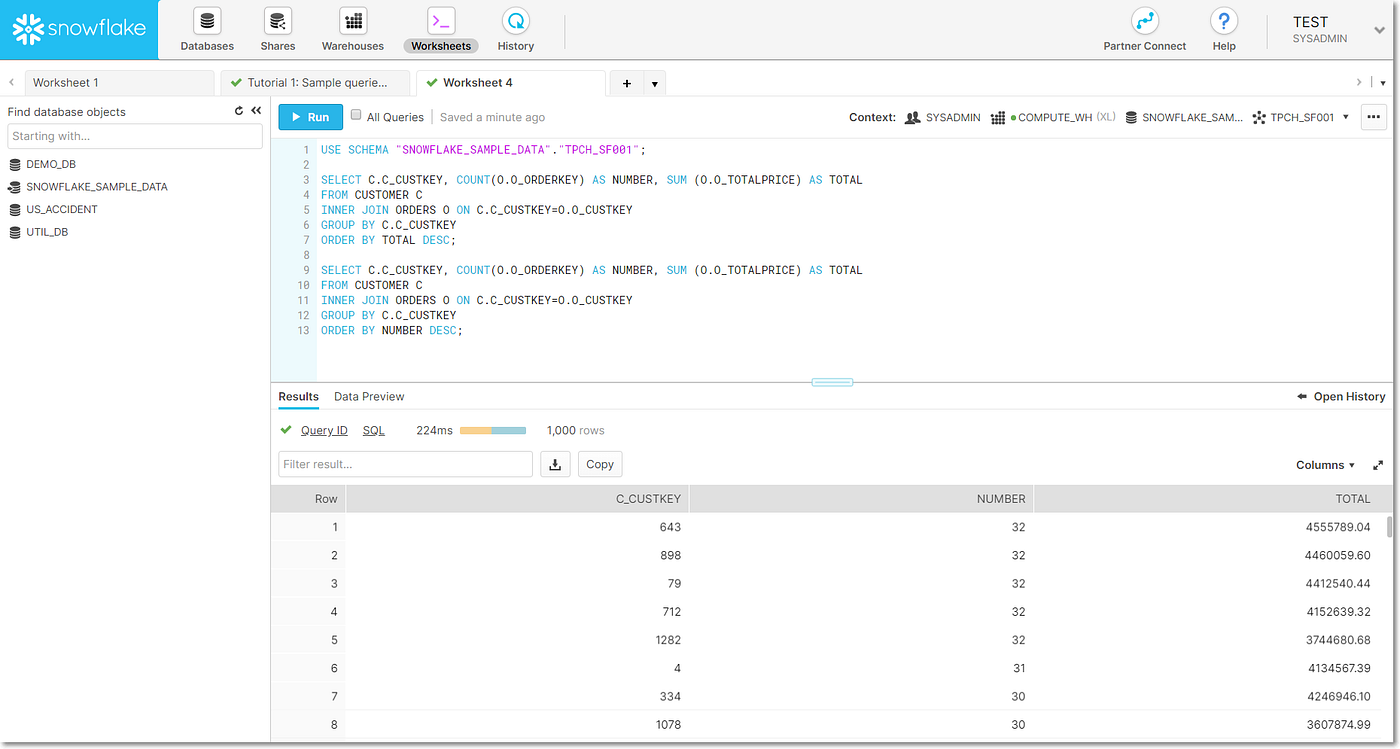

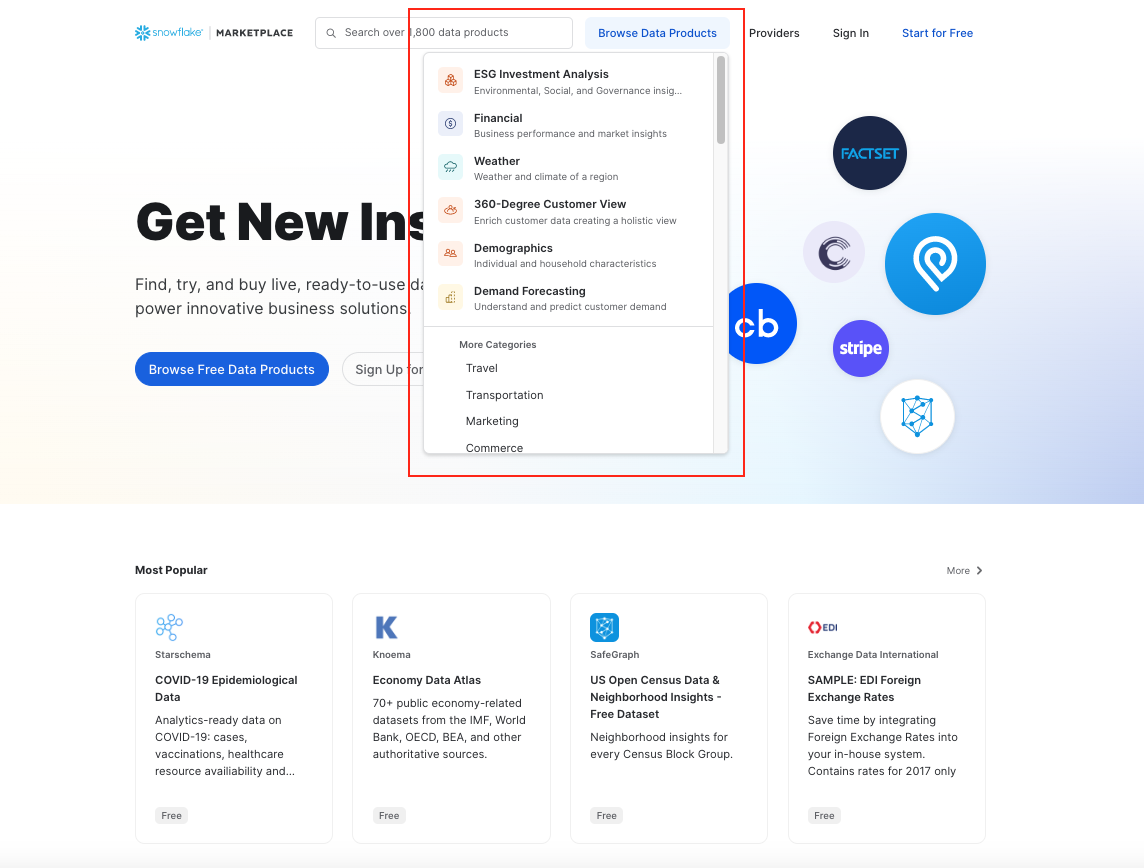

Snowflake's best-known ETL & Data Integration products are Snowflake Data Cloud, Snowflake Data Warehouse, Snowflake Marketplace, Snowflake Cloud Data Platform and Snowflake Data Platform. We analyzed and evaluated these products in depth. The ETL software from Snowflake is characterized by good support for the following topics:

- data warehouse

- data lakes

- SQL

- AWS

- cloud platforms

- authentication

- data management

- big data

- ETL

- data marts

Figure 52: Snowflake Data Cloud

Figure 53: Snowflake Data Warehouse

Figure 54: Snowflake Marketplace

22. Talend

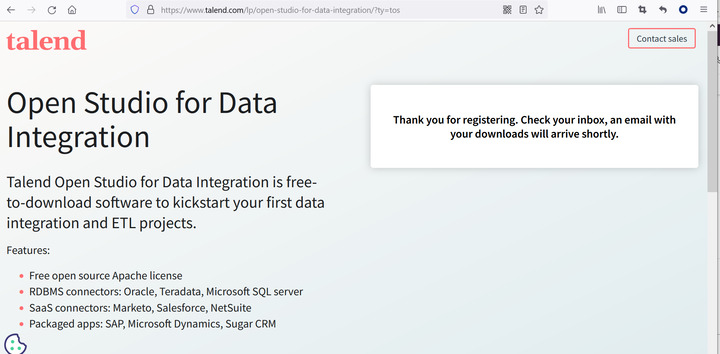

Talend is widely known for its products Talend Data Fabric, Talend Open Studio, Talend Data Catalog, Talend Data Integration and Talend Data Preparation. The company is 100% specialized in ETL & Data Integration. Take a look at the images below. The ETL tools from Talend are strong in the following areas, among others:

- data integration

- data quality

- ETL

- data management

- data catalog

- big data

- CDC

- AWS

- metadata management

Figure 55: Talend Data Fabric

Figure 56: Talend Open Studio

Figure 57: Talend Data Catalog

23. Tibco

Tibco is widely known for its products Omni-Gen, Tibco Webfocus, Tibco Data Virtualization, Tibco Cloud Integration and Tibco MDM. The company is 100% specialized in ETL & Data Integration. Take a look at the images below. The ETL tools from Tibco are strong in the following areas, among others:

- data virtualization

- data management

- master data management

- data quality

- data integration

- Apache

- API

- data sources

- streaming data

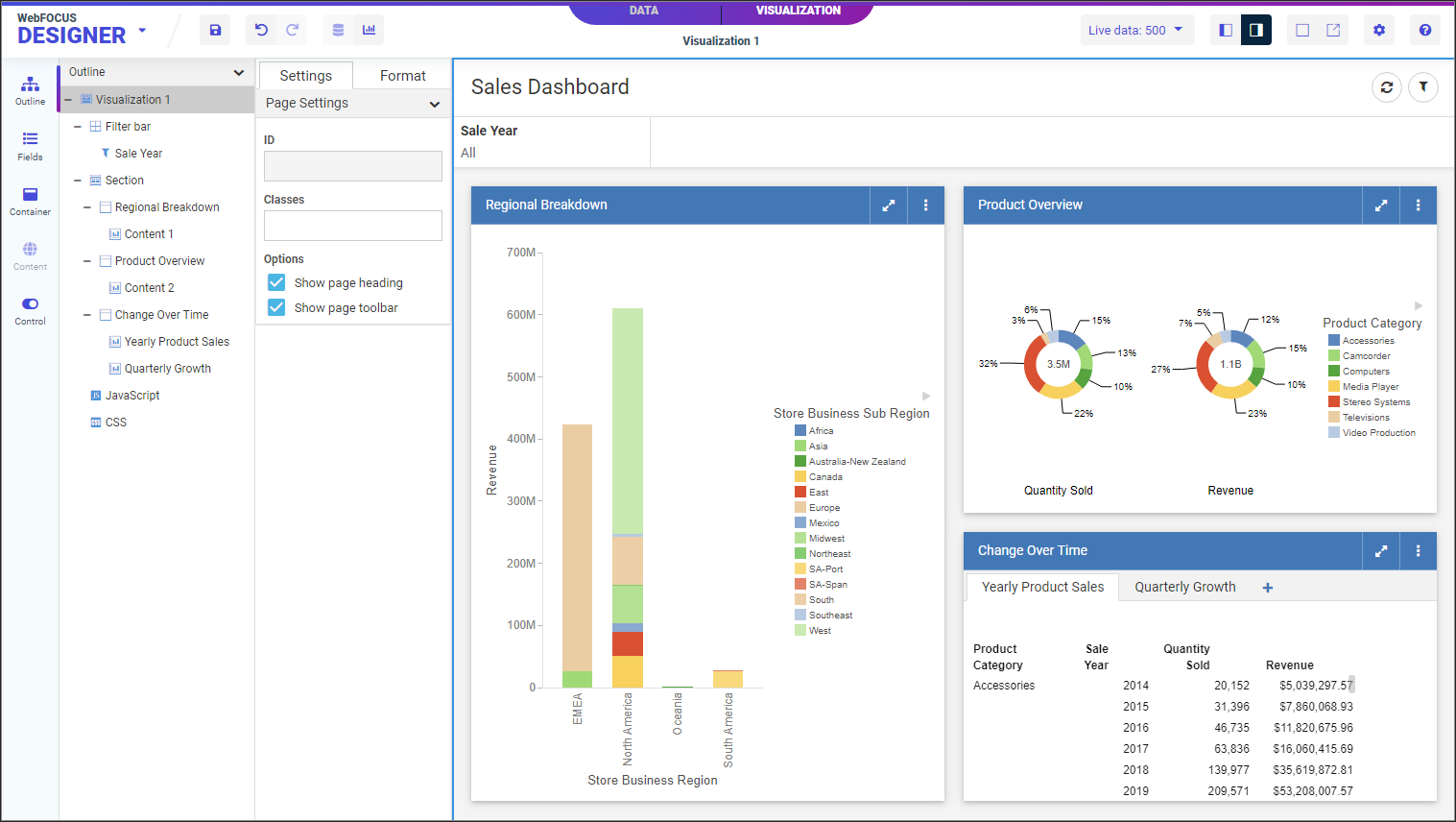

Figure 58: Tibco Webfocus

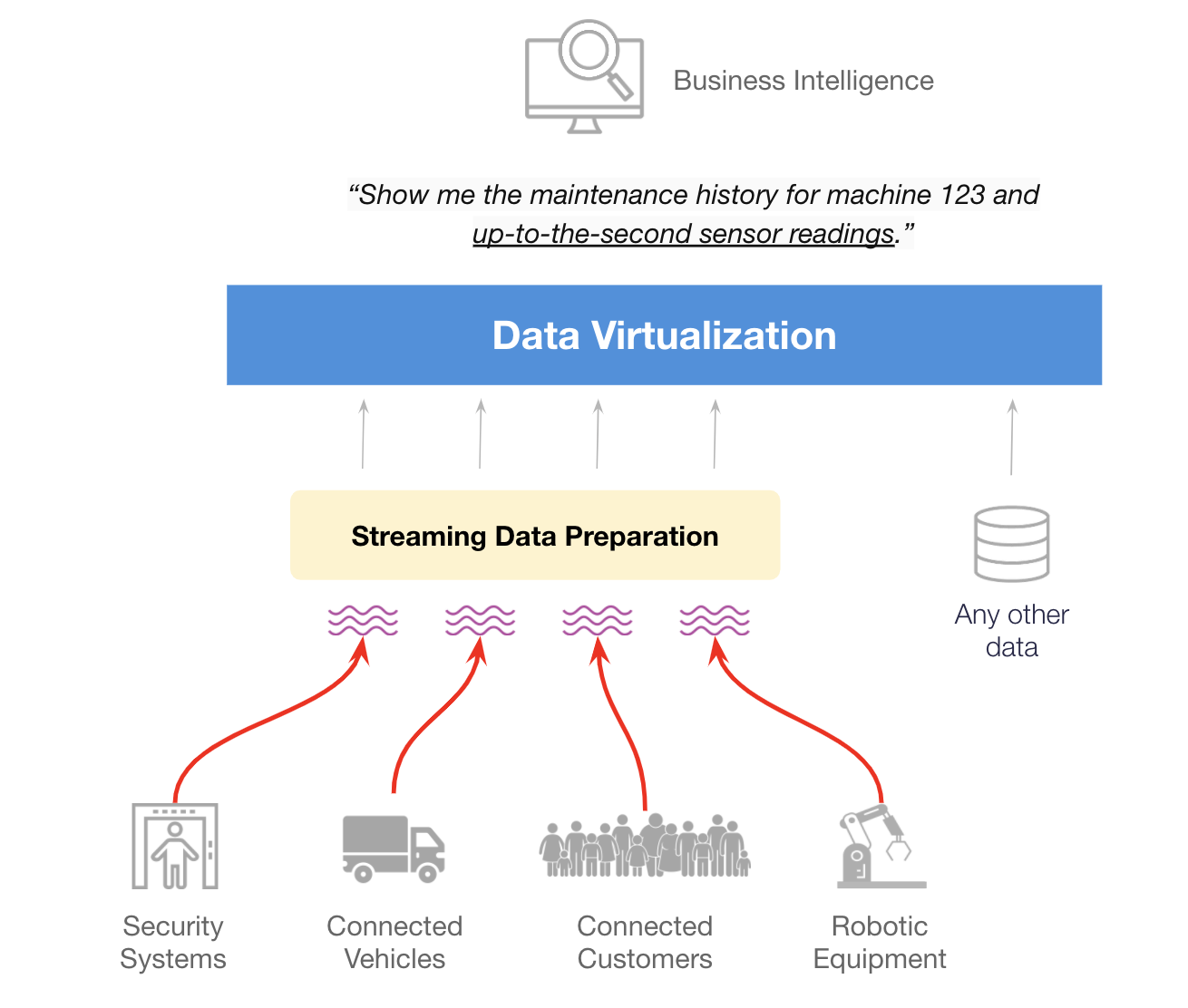

Figure 59: Tibco Data Virtualization

Figure 60: Tibco Cloud Integration

ETL tools and the data warehouse

Today, the top ETL tools in the market have vastly expanded their functionality beyond data warehousing and ETL. They now contain extended functionalities for data profiling, data cleansing, Enterprise Application Integration (EAI), Big Data processing, data governance, and master data management. Learn more about the top ETL tools in our 100% vendor-neutral guide, or discover the 9 reasons why you should build a data warehouse.

The ETL & Data Integration Guide 2024

Whatever ETL tool you need, never go by gut instinct, but always base your choice on objective data. In our ETL & Data Integration Guide™ 2024 you will find all major ETL software, which you can compare on more than 250 aspects. In addition, this guide includes a unique, online mini-course on ETL & Data Integration, allowing you to quickly grow into your role as a data integration specialist.

ETL is not enough, you need BI software too

ETL and data integration software are primarily meant to perform the extraction, transformation, and loading of data. Once the data is available, for example in a data warehouse or OLAP cube, Business Intelligence software is commonly used to analyze and visualize the data. This type of software also provides reporting, data discovery, data mining, and dashboarding functionality. Passionned Group also follows the Business Intelligence market closely. Learn more about our research on Business Intelligence software.
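The extraction, transformation, and loading steps described above can be illustrated with a minimal Python sketch. The sample CSV, the column names, and the `sales` table are hypothetical and chosen only for this example; real ETL tools wrap the same three steps in connectors, scheduling, and monitoring.

```python
import csv
import io
import sqlite3

# Hypothetical source data; in practice this would come from a file or an API.
SOURCE_CSV = """order_id,country,amount
1,nl,10.50
2,US,20.00
3,nl,5.25
"""


def extract(text):
    """Extract: read raw rows from CSV text as dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: normalize country codes and convert amounts to numbers."""
    return [
        (int(r["order_id"]), r["country"].strip().upper(), float(r["amount"]))
        for r in rows
    ]


def load(conn, records):
    """Load: write the cleaned records into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS sales (order_id INTEGER, country TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", records)
    conn.commit()


def run_pipeline(conn, text=SOURCE_CSV):
    """Run the three steps in order against the given connection."""
    load(conn, transform(extract(text)))


if __name__ == "__main__":
    connection = sqlite3.connect(":memory:")
    run_pipeline(connection)
    # A BI tool would now query the loaded table, for example per country.
    for row in connection.execute(
        "SELECT country, SUM(amount) FROM sales GROUP BY country ORDER BY country"
    ):
        print(row)
```

Once the data sits in the target table, the analysis and visualization side is a matter of querying it, which is where Business Intelligence software takes over.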

What if your favorite ETL tool is not on this list?

If you think that a certain ETL tool is missing from this list and should be included, please fill out the request form and we’ll look into it straight away.

List of Data Integration tools

This is an overview of all the important ETL vendors on the market. The vendors provide solutions to perform data integration, data migration, data management, data profiling, and ETL. Some of the vendors also offer business intelligence software. Click on the name of the vendor/tool to learn more.

- Actian ETL & Data Integration

- Adeptia ETL & Data Integration

- CloverDX ETL & Data Integration

- Elixir Tech ETL & Data Integration

- ElixirData ETL & Data Integration

- Fivetran ETL & Data Integration

- Hitachi Vantara ETL & Data Integration

- IBM ETL & Data Integration

- Informatica ETL & Data Integration

- Integrate.io ETL & Data Integration

- Microsoft ETL & Data Integration

- OpenText ETL & Data Integration

- Oracle ETL & Data Integration

- Precisely ETL & Data Integration

- Qlik ETL & Data Integration

- SAP ETL & Data Integration

- SAS ETL & Data Integration

- Sesame Software ETL & Data Integration

- Skyvia ETL & Data Integration

- SnapLogic ETL & Data Integration

- Talend ETL & Data Integration

- Tibco ETL & Data Integration

How to compare these vendors?

In our 100% vendor-independent ETL & Data Integration Guide 2024 we compare these ETL vendors as well as their solutions on 90 key criteria that are important for selecting the right software for your organization. Do you need a solution that runs on Unix? Or Linux? Do you need a very user-friendly ETL tool? Is Data Quality important for your organization? Our guide provides all the answers to the important questions you'll have when planning to purchase an ETL tool or data integration solution.

Why get an ETL tool?

A decade ago the vast majority of data warehouse systems were handcrafted, but the market for ETL software has steadily grown and the majority of practitioners now use ETL tools in place of hand-coded systems. Does it make sense to hand-code (SQL) a data warehouse today, or is an ETL tool a better choice?

What are the 7 biggest benefits of using ETL tools?

We now generally recommend using an ETL tool, but a custom-built approach can still make sense, especially when it is model-driven. This publication summarizes the seven biggest benefits of ETL tools and offers guidance on making the right choice for your situation.

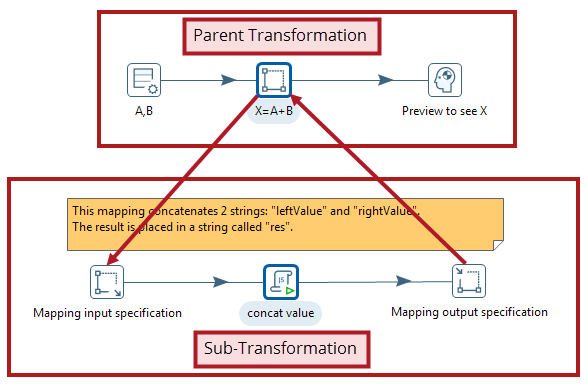

1. Visual flow

The single greatest advantage of an ETL tool is that it provides a visual flow of the system's logic (if the tool is flow-based). Each ETL tool presents these flows differently, but even the least appealing of these ETL tools compares favorably to custom systems consisting of plain SQL, stored procedures, system scripts, and perhaps a handful of other technologies.

2. Structured system design

ETL tools are designed for the specific problem of data integration: populating a data warehouse or integrating data from multiple sources, or even just moving the data. With maintainability and extensibility in mind, they provide, in many cases, a metadata-driven structure to the developers. This is a particularly big advantage for teams building their first data warehouse.
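To make the idea of a metadata-driven structure more concrete, here is a minimal sketch in which the mapping from source to target columns is plain data rather than code. The `MAPPING` table, the column names, and the `apply_mapping` helper are hypothetical; commercial tools keep comparable metadata in their own repositories.

```python
# Hypothetical mapping metadata: target column -> (source column, converter).
MAPPING = {
    "customer_id": ("CUST_NO", int),
    "full_name": ("NAME", str.strip),
    "revenue": ("REV", float),
}


def apply_mapping(source_row, mapping=MAPPING):
    """Build a target row by applying each mapping rule to the source row."""
    return {
        target: convert(source_row[source])
        for target, (source, convert) in mapping.items()
    }


if __name__ == "__main__":
    row = {"CUST_NO": "42", "NAME": "  Ada Lovelace ", "REV": "1200.50"}
    print(apply_mapping(row))
```

Because the rules live in one table, adding a column means adding one entry instead of editing transformation code, which is the maintainability argument made above.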

3. Operational resilience

Many of the home-grown data warehouses we evaluated are rather fragile: they have many emergent operational problems. ETL tools provide functionality and standards for operating and monitoring the system in production. It’s certainly possible to design and build a well-instrumented, hand-coded ETL application. Nonetheless, it’s easier for a data warehouse/business intelligence team to build on the features of an ETL tool to build a resilient ETL system.
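As an illustration of what a well-instrumented, hand-coded ETL step might involve, the sketch below wraps a step in logging and a simple retry loop. The `run_step` helper and its parameters are assumptions made for this example, not the behavior of any particular ETL product.

```python
import logging
import time

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("etl")


def run_step(name, func, retries=3, delay_seconds=0.0):
    """Run one ETL step, logging each attempt and retrying on failure."""
    for attempt in range(1, retries + 1):
        try:
            result = func()
            log.info("step %s succeeded on attempt %d", name, attempt)
            return result
        except Exception as exc:  # broad on purpose: any failure triggers a retry
            log.warning("step %s failed on attempt %d: %s", name, attempt, exc)
            if attempt == retries:
                raise  # give up and surface the last error to the operator
            time.sleep(delay_seconds)


if __name__ == "__main__":
    calls = {"count": 0}

    def flaky_extract():
        # Hypothetical step that fails once before succeeding.
        calls["count"] += 1
        if calls["count"] < 2:
            raise ConnectionError("source temporarily unavailable")
        return ["row-1", "row-2"]

    print(run_step("extract", flaky_extract))
```

Every step of a hand-coded system needs this kind of scaffolding written and maintained by the team, whereas an ETL tool typically supplies it as a standard feature.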

4. Data-lineage and impact analysis

We would like to be able to right-click on a number in a report and see exactly how it was calculated, where the data was stored in the data warehouse, how it was transformed, when the data was most recently refreshed, and from what source system(s) the numbers were extracted. Impact analysis is the flip side of lineage: we’d like to look at a table or column in the source system and know which ETL procedures, tables, cubes, and user reports might be affected if a structural change is needed. In the absence of ETL standards that hand-coded systems could conform to, we must rely on ETL vendors to supply this functionality — though, unfortunately, just half of them have so far (more results in our survey).
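Lineage and impact analysis are both traversals of the same dependency graph, in opposite directions. The sketch below assumes a hypothetical `FEEDS` dictionary that records which object feeds which; the object names are invented, and a real tool would derive this graph from its own metadata.

```python
from collections import deque

# Hypothetical dependency edges: each object feeds the objects listed after it.
FEEDS = {
    "crm.customers": ["etl.load_customers"],
    "etl.load_customers": ["dwh.dim_customer"],
    "dwh.dim_customer": ["cube.sales", "report.top_customers"],
    "erp.orders": ["etl.load_orders"],
    "etl.load_orders": ["dwh.fact_sales"],
    "dwh.fact_sales": ["cube.sales"],
    "cube.sales": ["report.revenue"],
}


def reachable(start, edges):
    """Breadth-first search returning every node reachable from start."""
    seen, queue = set(), deque([start])
    while queue:
        node = queue.popleft()
        for nxt in edges.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen


def invert(edges):
    """Reverse all edges so that lineage can reuse the same traversal."""
    reverse = {}
    for src, targets in edges.items():
        for tgt in targets:
            reverse.setdefault(tgt, []).append(src)
    return reverse


def impact(obj):
    """Impact analysis: everything downstream of a source object."""
    return reachable(obj, FEEDS)


def lineage(obj):
    """Lineage: everything upstream that contributes to an object."""
    return reachable(obj, invert(FEEDS))


if __name__ == "__main__":
    print(sorted(impact("crm.customers")))
    print(sorted(lineage("report.revenue")))
```

The traversal itself is simple; the hard part in a hand-coded system is keeping the graph complete and current, which is why this functionality usually has to come from the vendor.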

5. Advanced data profiling and cleansing

Most data warehouses are structurally complex, with many data sources and targets. At the same time, requirements for transformation are often fairly simple, consisting primarily of lookups and substitutions. If you have a complex transformation requirement, for example, if you need to de-duplicate your customer list, you should buy an additional module on top of the ETL solution (data profiling/data cleansing). At the very least, ETL tools provide a richer set of cleansing functions than those available in SQL. Download the ETL & Data Integration Guide to see how the ETL tools compare on these aspects.
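The lookups, substitutions, and de-duplication mentioned above can be sketched in a few lines. The `COUNTRY_LOOKUP` table, the field names, and the choice of e-mail address as the match key are assumptions for illustration; dedicated cleansing modules typically use fuzzy matching rather than an exact key.

```python
# Hypothetical lookup table that substitutes country spellings with a code.
COUNTRY_LOOKUP = {
    "holland": "NL",
    "netherlands": "NL",
    "nl": "NL",
    "usa": "US",
    "us": "US",
}


def cleanse(record):
    """Trim whitespace, normalize case, and substitute values via the lookup."""
    name = " ".join(record["name"].split()).title()
    email = record["email"].strip().lower()
    country = COUNTRY_LOOKUP.get(record["country"].strip().lower(), "UNKNOWN")
    return {"name": name, "email": email, "country": country}


def deduplicate(records):
    """Keep the first record per e-mail address (a deliberately naive match key)."""
    seen, unique = set(), []
    for rec in map(cleanse, records):
        if rec["email"] not in seen:
            seen.add(rec["email"])
            unique.append(rec)
    return unique


if __name__ == "__main__":
    customers = [
        {"name": "  ada   lovelace", "email": "Ada@Example.com ", "country": "Holland"},
        {"name": "Ada Lovelace", "email": "ada@example.com", "country": "NL"},
        {"name": "grace hopper", "email": "grace@example.com", "country": "usa"},
    ]
    for customer in deduplicate(customers):
        print(customer)
```

An exact-key approach like this misses duplicates with different e-mail addresses or misspelled names, which is the gap the additional profiling and cleansing modules are meant to close.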

6. Performance

You might be surprised that performance is listed near the end of the advantages of ETL tools. It's possible to build a high-performance data warehouse whether you use an ETL tool or not. It's also possible to build an absolute dog of a data warehouse whether you use an ETL tool or not. We've never been able to test whether an excellent hand-coded data warehouse outperforms an excellent tool-based data warehouse; we believe the answer is that it's situational. But the structure imposed by an ETL platform makes it easier for a (novice) ETL developer to build a high-quality system. Furthermore, many ETL tools provide performance-enhancing technologies, such as Massively Parallel Processing, Symmetric Multi-Processing, and Cluster Awareness.

7. Big Data

A lot of ETL tools are now capable of combining structured data with unstructured data in one mapping. In addition, they can handle very large amounts of data that don't necessarily have to be stored in data warehouses. Nowadays, Hadoop connectors, or similar interfaces to big data sources, are provided by almost 40% of the ETL tools, and support for Big Data is growing continually.

Download the ETL & Data Integration Guide: 20+ tools

Get all the information to select the best (enterprise) ETL tooling for the best price by downloading our ETL & Data Integration Guide 2024. You will get a real insight into using ETL tools to build successful ETL applications. You’ll receive the results of comparing all the significant ETL tools/Data Integration solutions across more than 500 criteria.

Which vendors provide open-source ETL?

Most of the vendors listed above are commercial vendors, which means that the software is not free and that the source code isn't available to developers. Only Pentaho, Talend, and CloverETL provide an open-source ETL tool, and in some respects they score on par with the commercial offerings.

Should you use open-source ETL tools?

Many organizations, both private and public, are currently evaluating or deploying open-source ETL tools like Talend, CloverETL, and Pentaho. These leading open-source ETL suites offer a range of capabilities, from ETL to ad-hoc analysis and reporting.

Adoption increases for open-source ETL tools

On a feature-by-feature comparison, many open-source ETL tools still can’t beat the leading closed-source offerings, but, as a leading analyst firm recently stated in a research paper: open-source adoption increases, because it is often considered ‘good enough’. When cutting-edge functionalities are not of the essence, these offerings provide a robust and complete alternative. The functionality of Pentaho Data Integration (PDI) for example, has increased so rapidly in the last two years that it has become a major competitor for commercial ETL tools.

Open-source ETL tools are worth considering

If you combine the 'good enough' factor with an attractive price point and the support delivered by the vendors, open-source ETL is certainly worth considering. In the ETL tools comparison report there is a thorough evaluation of the most common open-source ETL tools, such as Talend, CloverETL, and Pentaho.

Do you want to purchase software from one of these ETL vendors?

Vendor selection can be a time-consuming task. We have assisted organizations for over 10 years in selecting the right vendor and we’ll gladly help you to choose the best ETL solution for your organization. Through our extensive knowledge of the market, we have also been able to support our customers in the negotiation process with vendors.